Dataloco

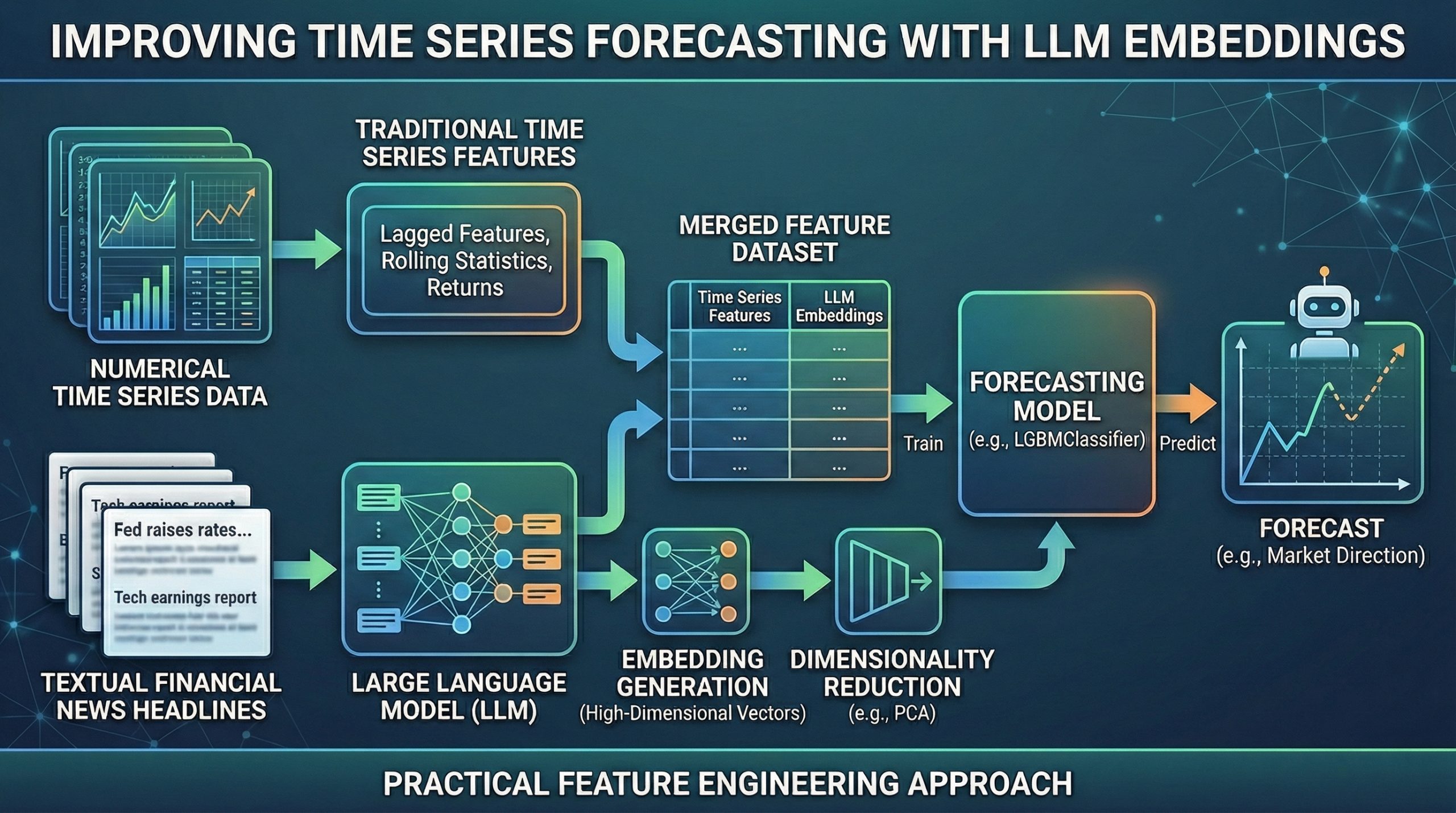

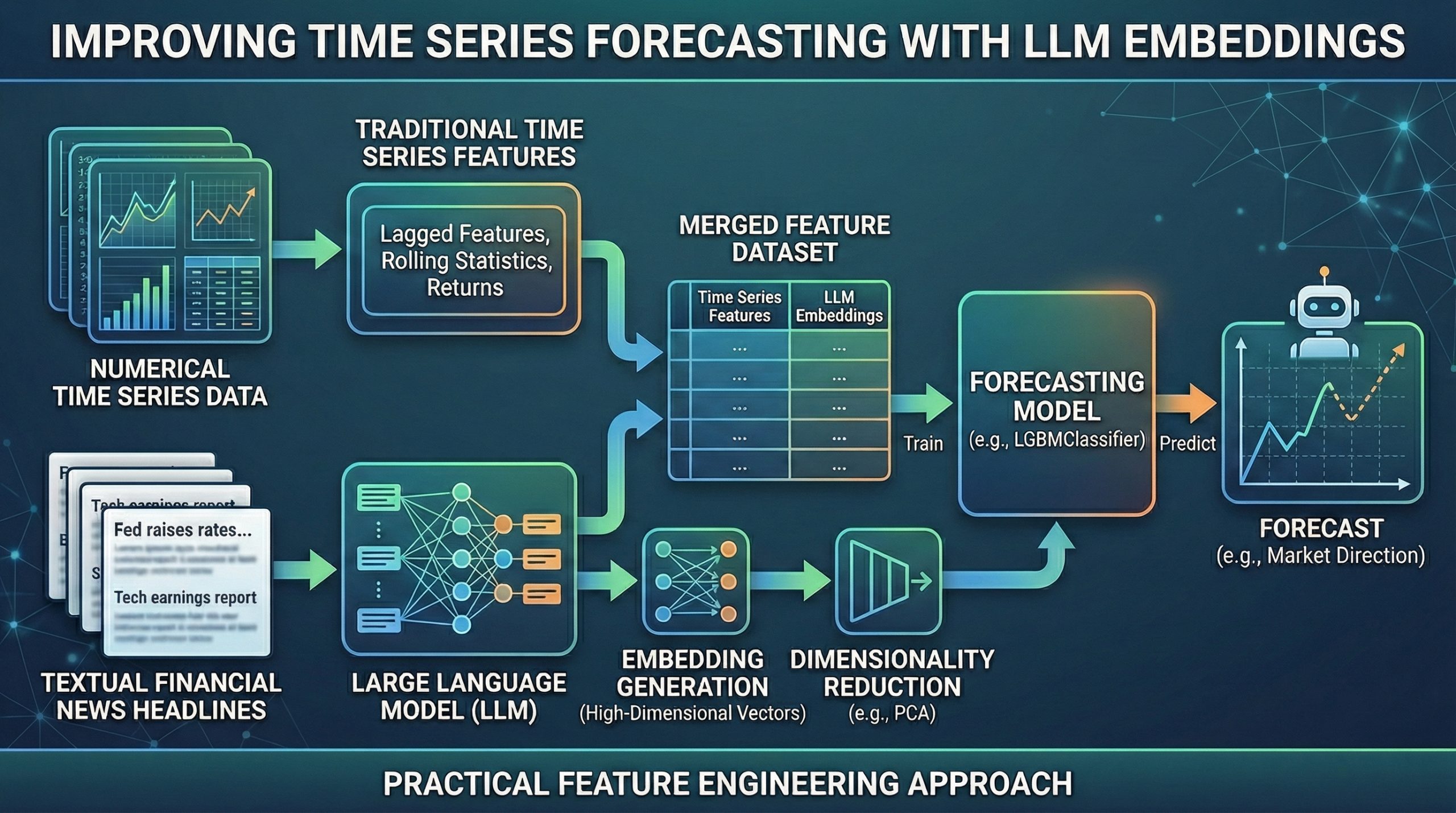

Can LLM Embeddings Improve Time Series Forecasting? A Practical Feature Engineering Approach

Using large language models (LLMs), or their outputs, for all kinds of machine learning tasks has become something of a trend, even for predictive tasks that were being solved long before language models emerged.

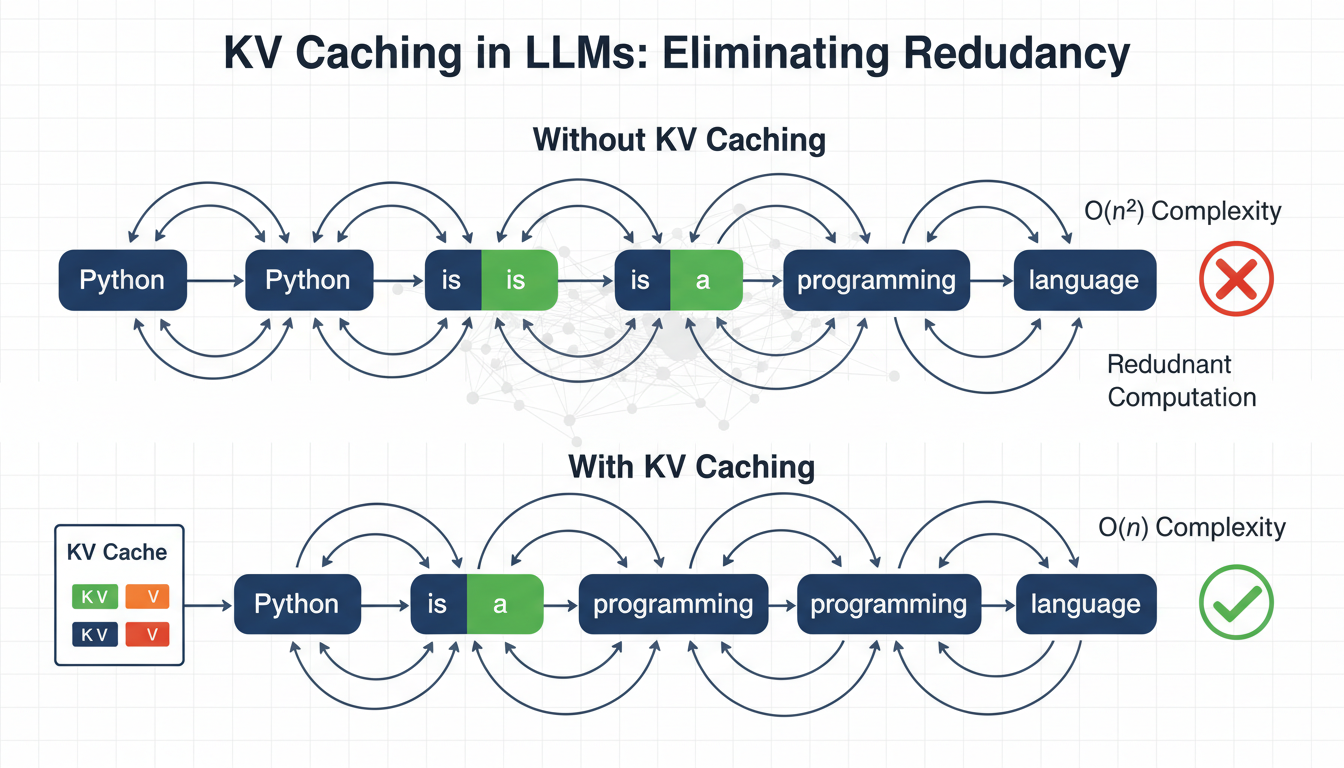

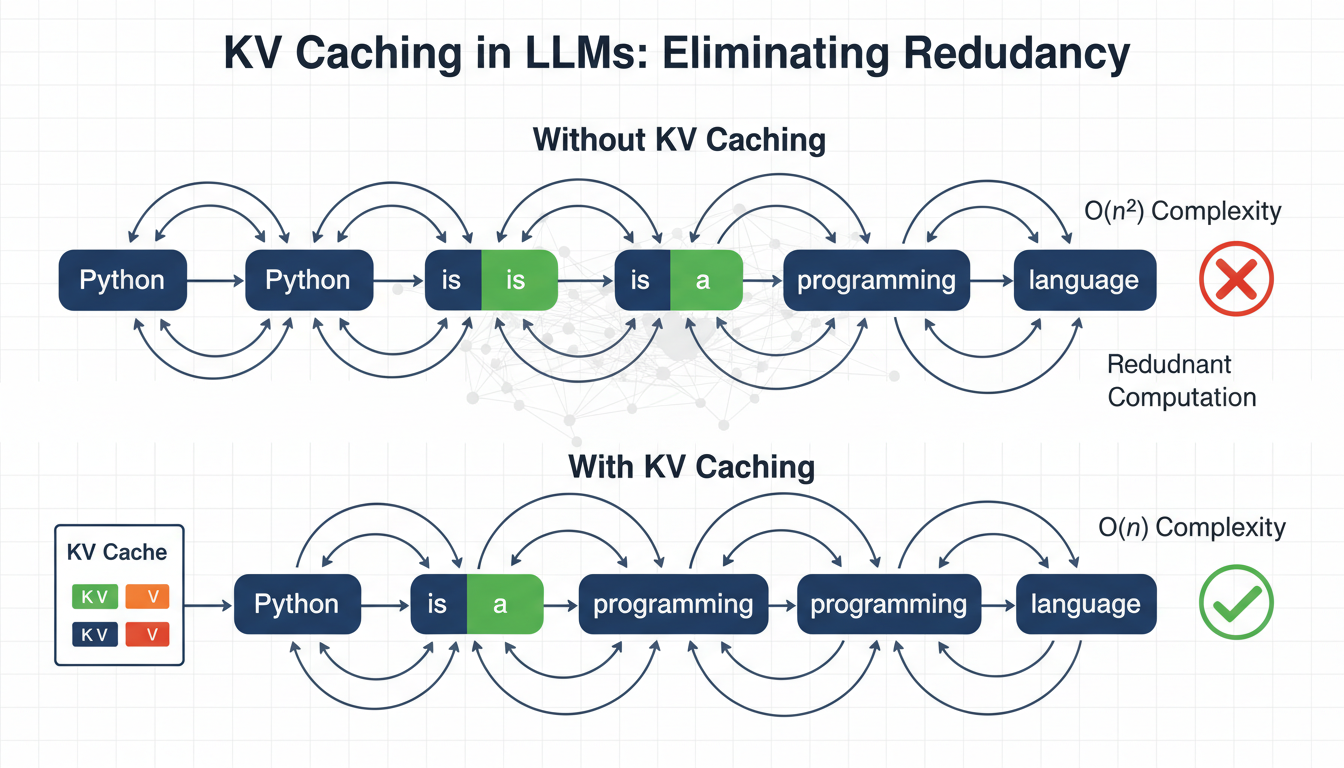

KV Caching in LLMs: A Guide for Developers

Language models generate text one token at a time, reprocessing the entire sequence at each step.

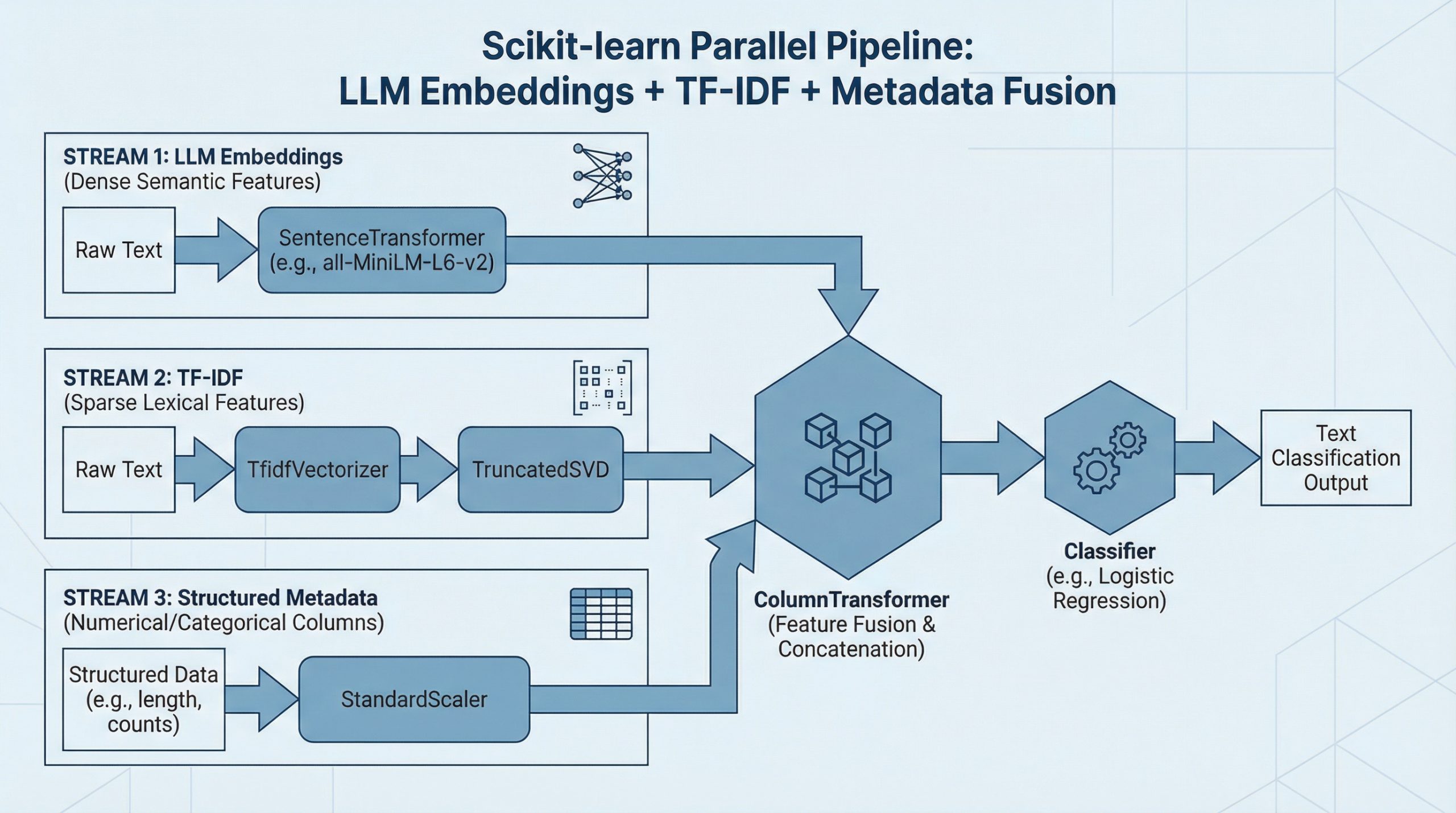

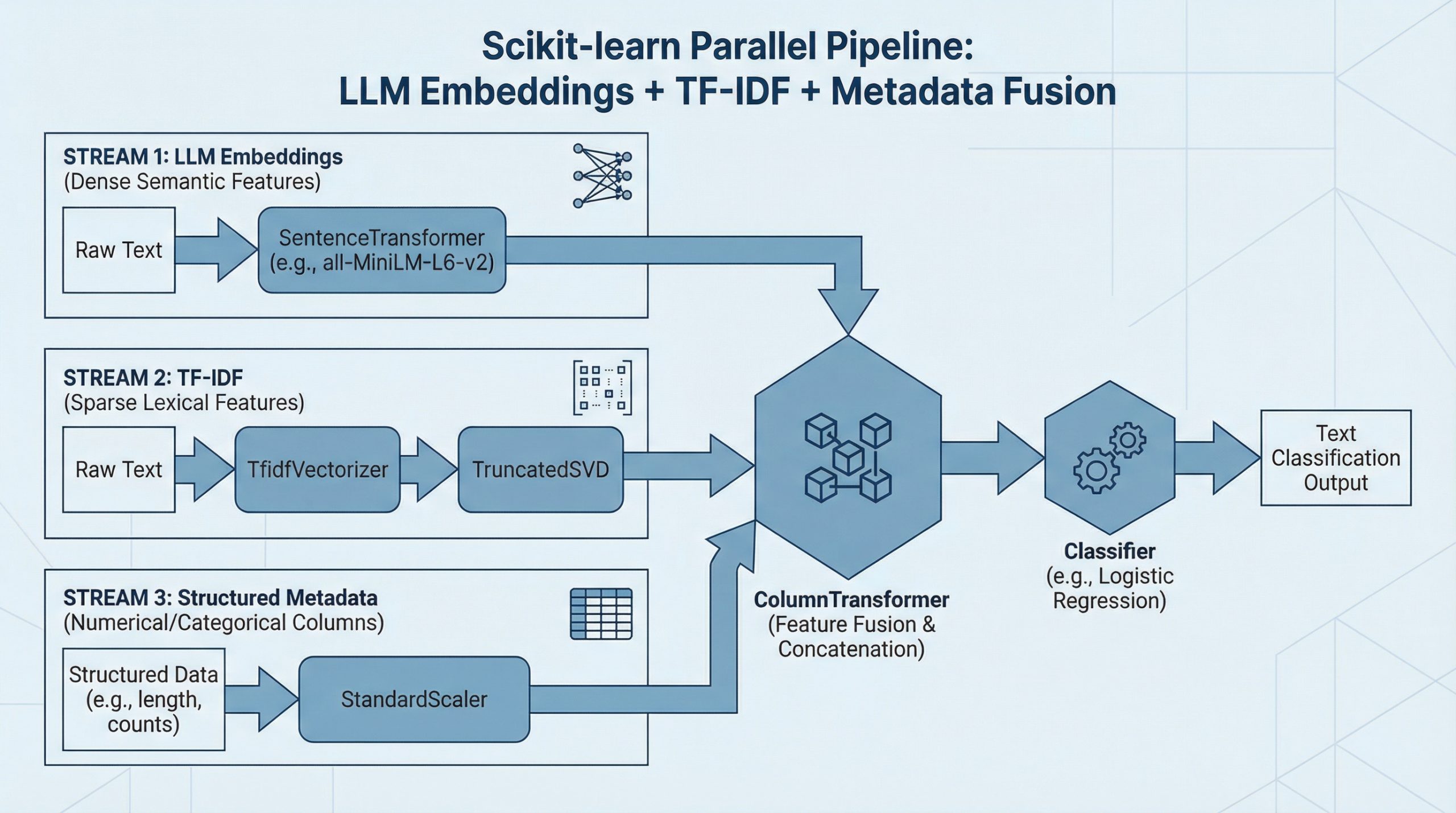

How to Combine LLM Embeddings + TF-IDF + Metadata in One Scikit-learn Pipeline

Data fusion, or combining diverse pieces of data into a single pipeline, sounds ambitious enough.

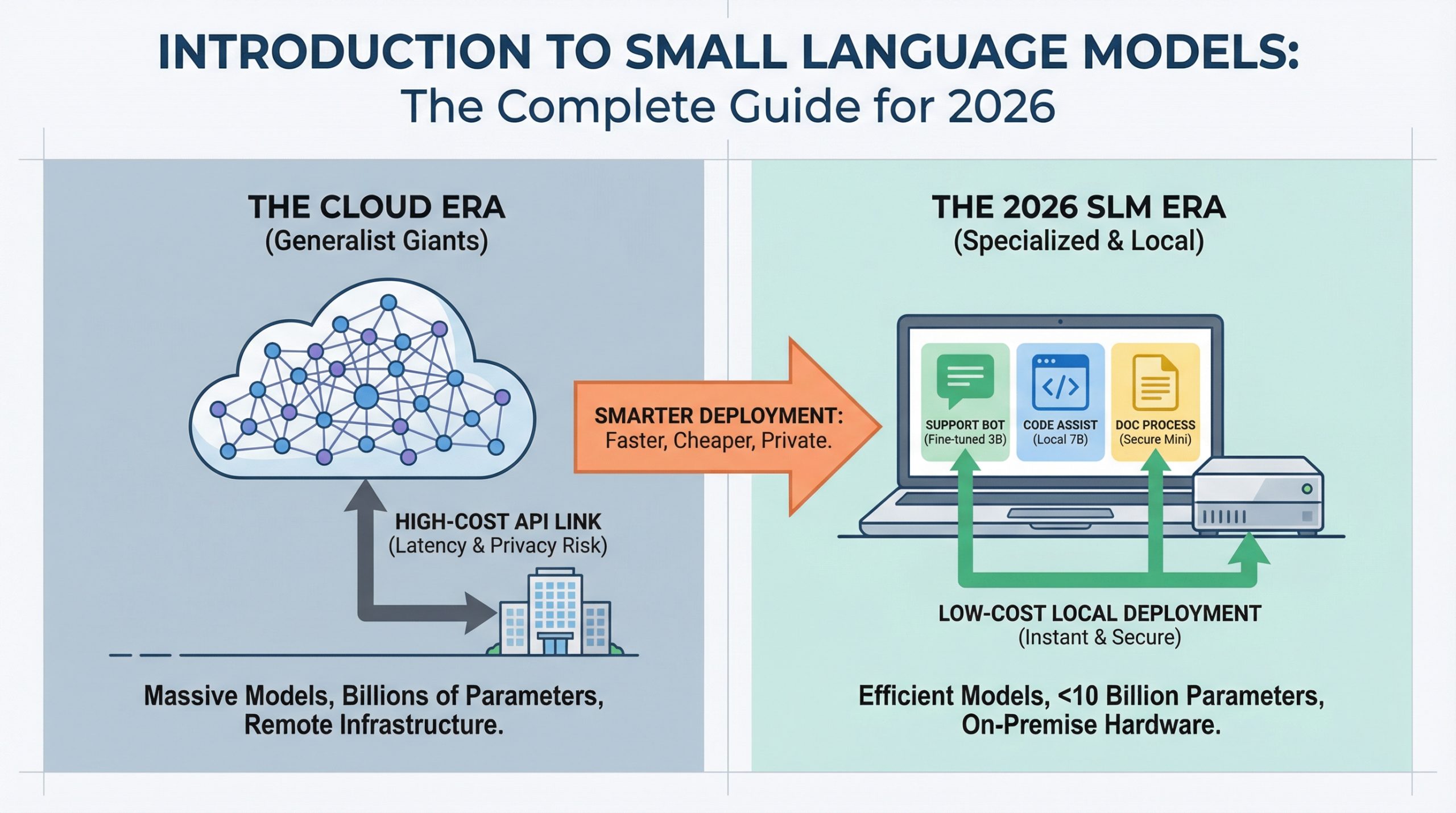

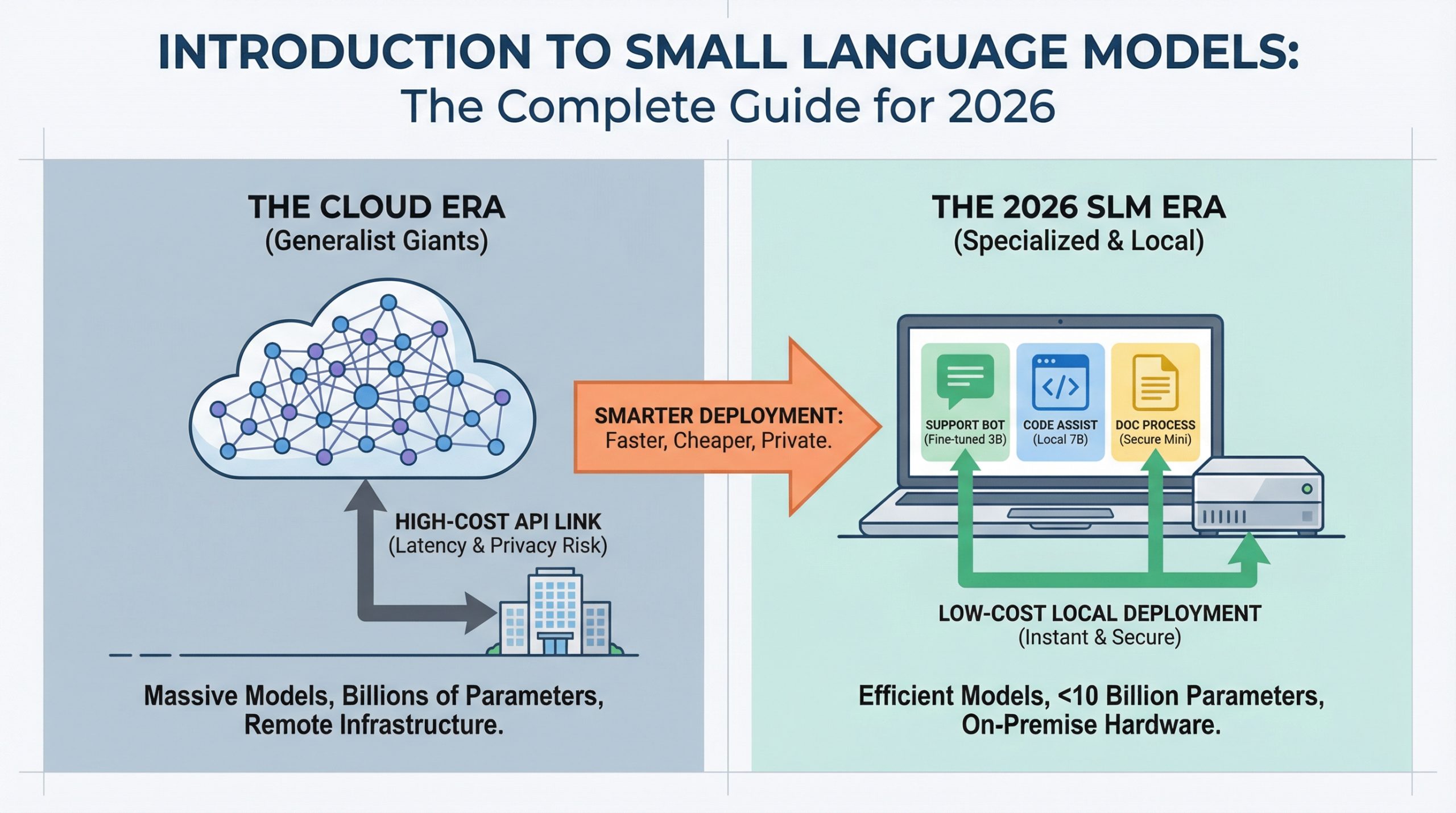

Introduction to Small Language Models: The Complete Guide for 2026

AI deployment is changing.

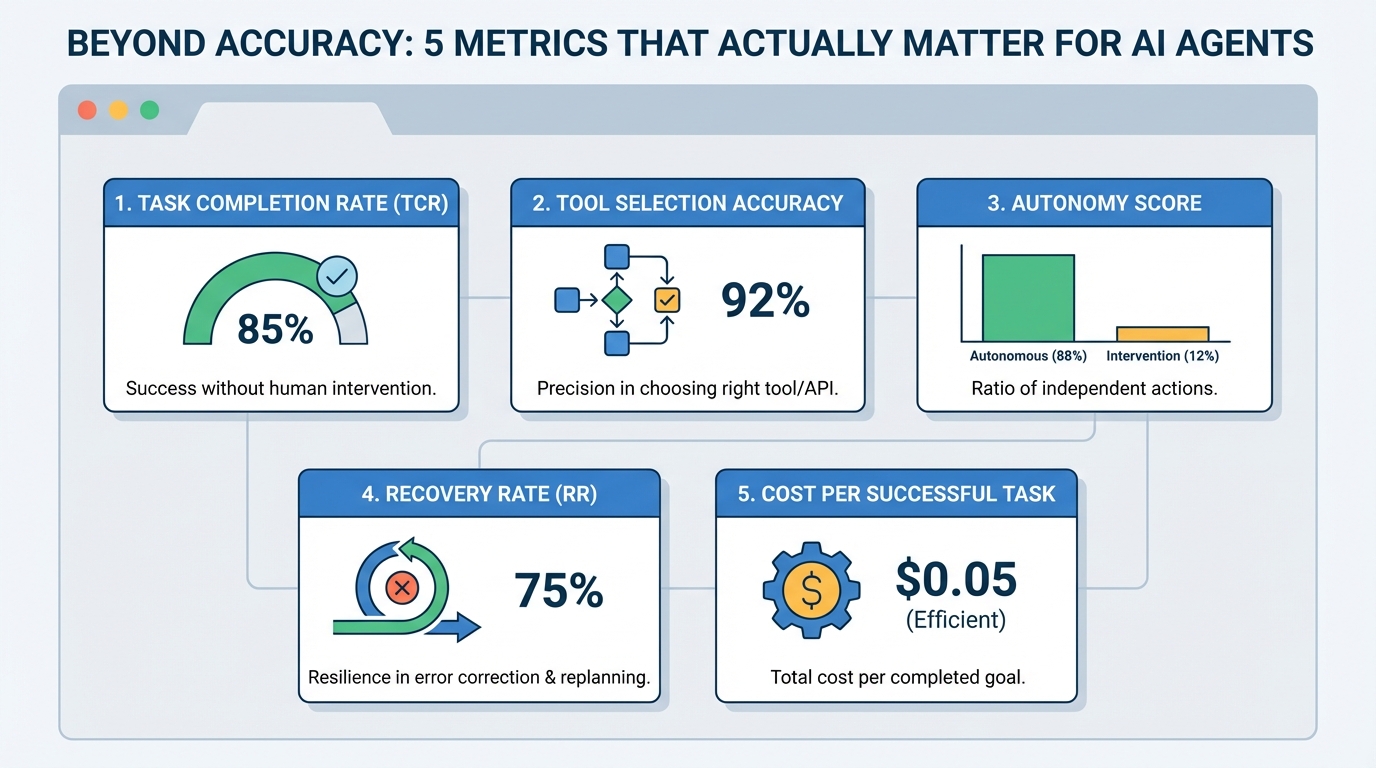

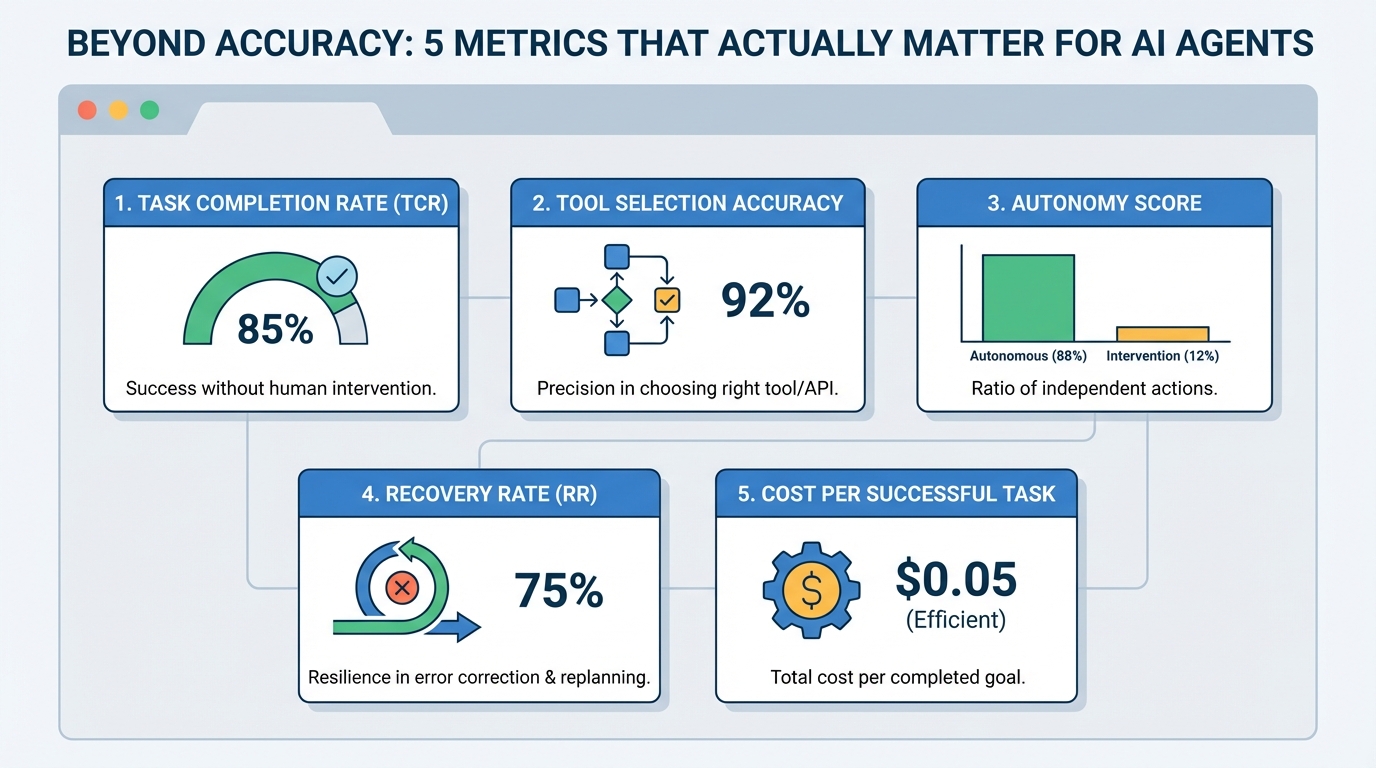

Beyond Accuracy: 5 Metrics That Actually Matter for AI Agents

AI agents, or autonomous systems powered by agentic AI, have reshaped the current landscape of AI systems and deployments.

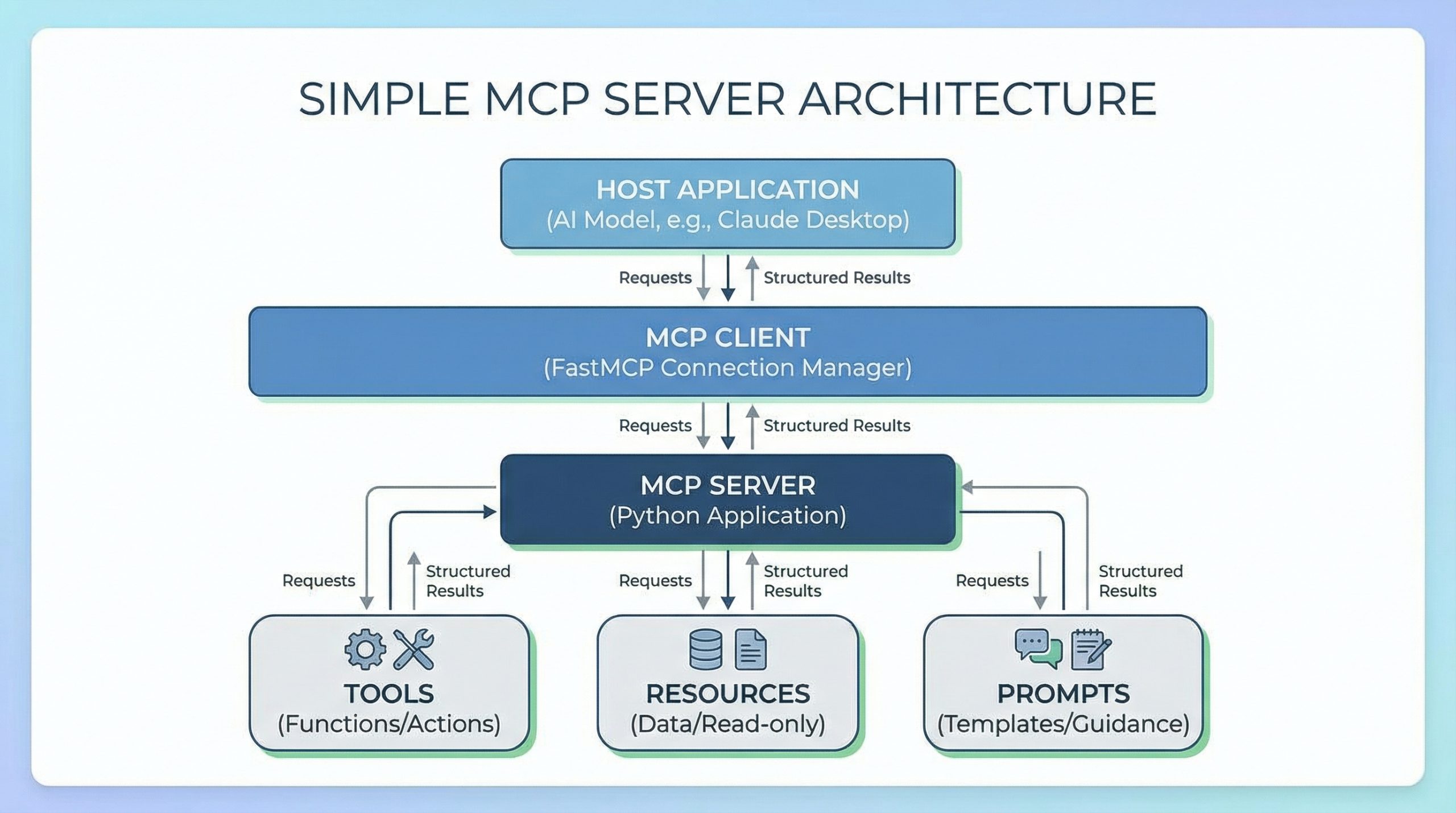

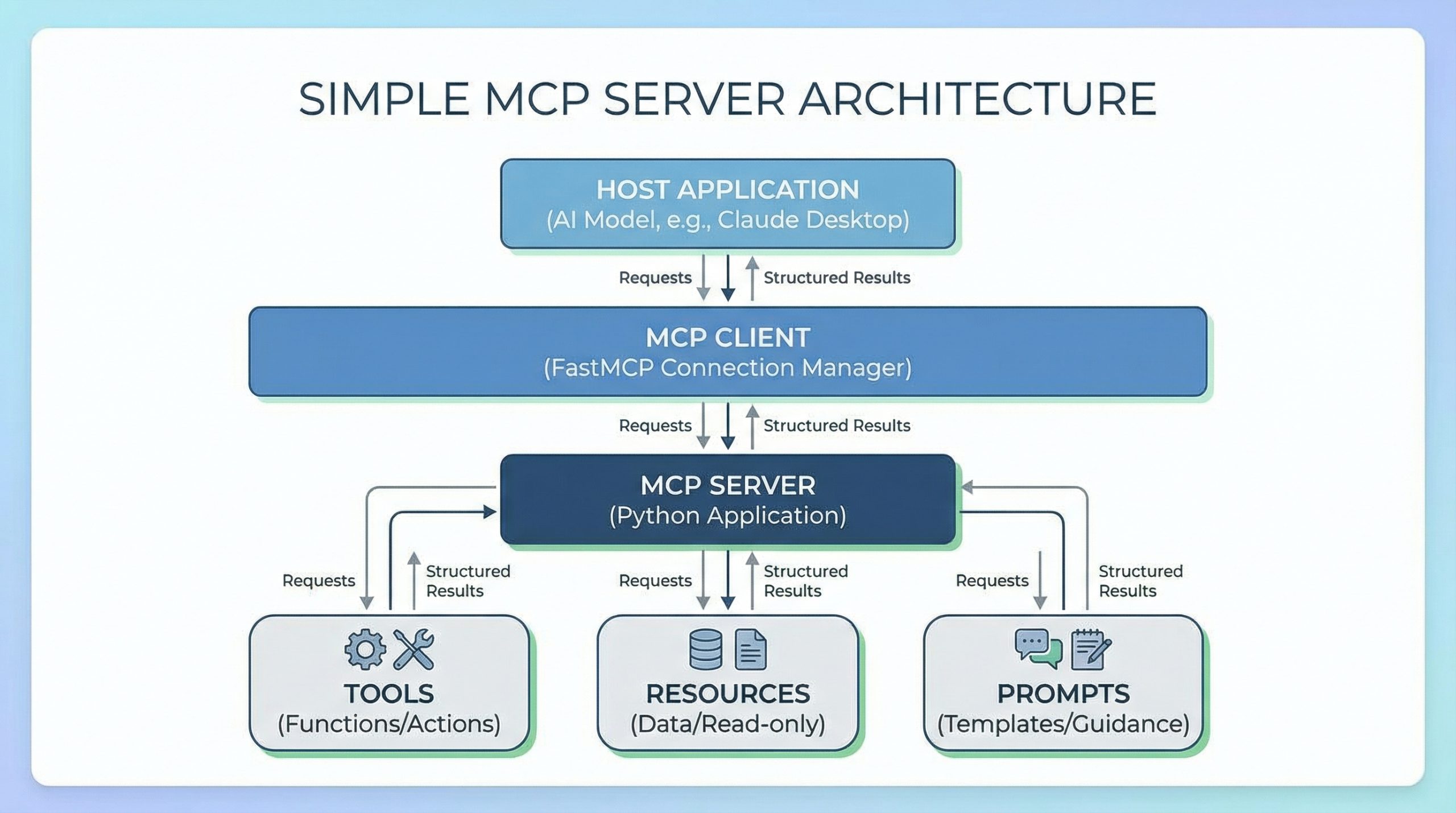

Building a Simple MCP Server in Python

Have you ever tried connecting a language model to your own data or tools? If so, you know it often means writing custom integrations, managing API schemas, and wrestling with authentication.

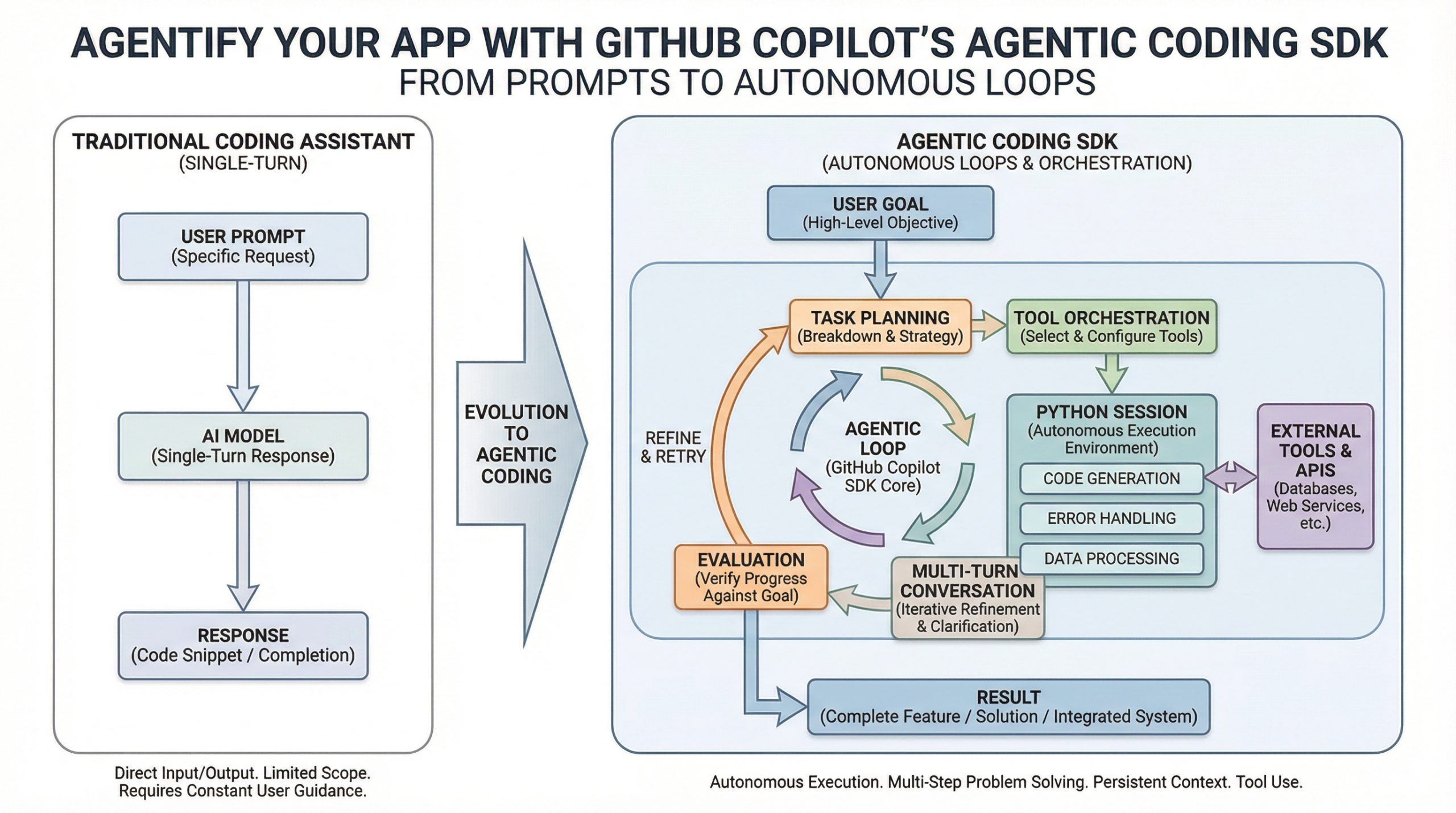

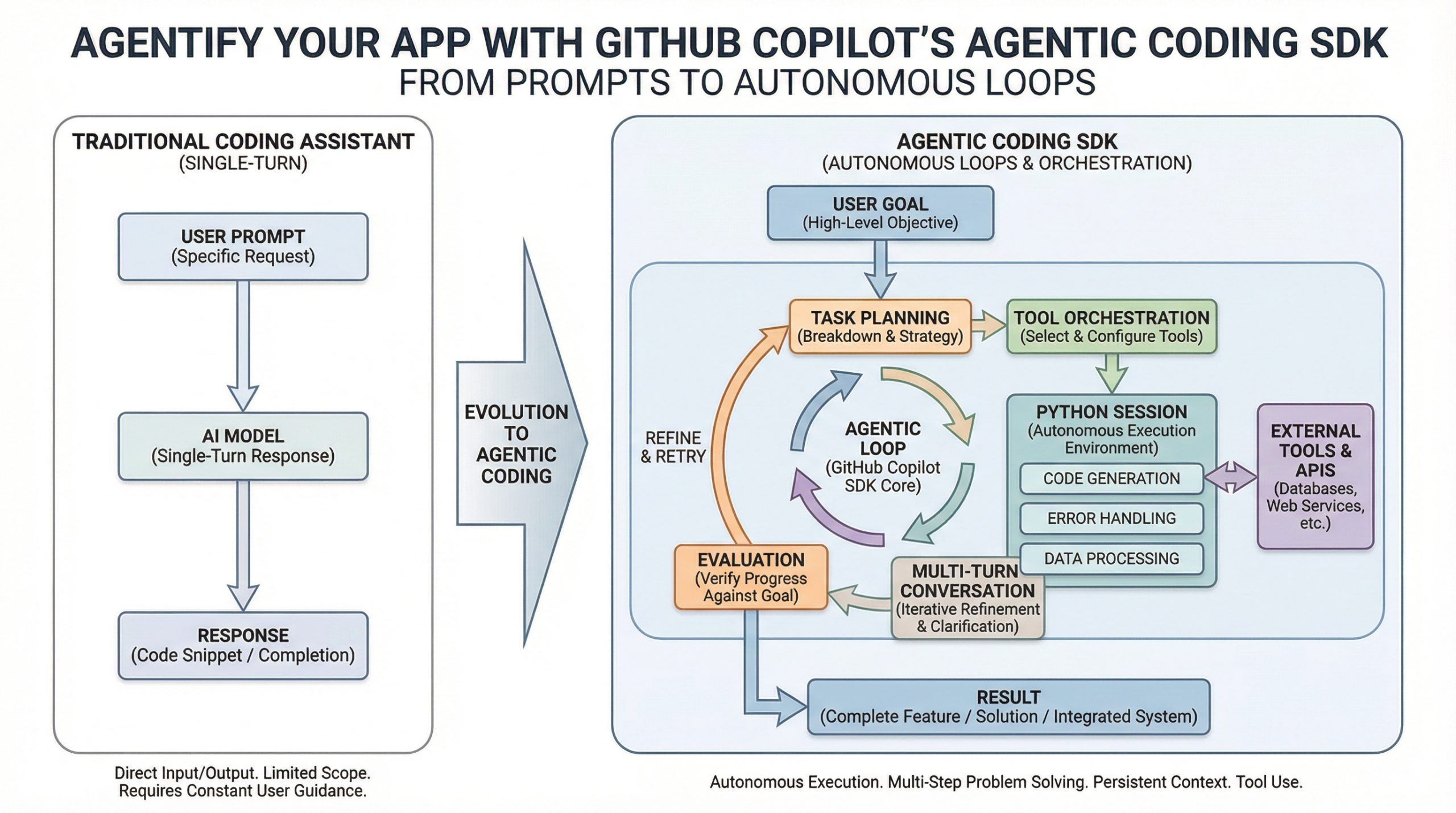

Agentify Your App with GitHub Copilot’s Agentic Coding SDK

For years, GitHub Copilot has served as a powerful pair programming tool, suggesting the next line of code.

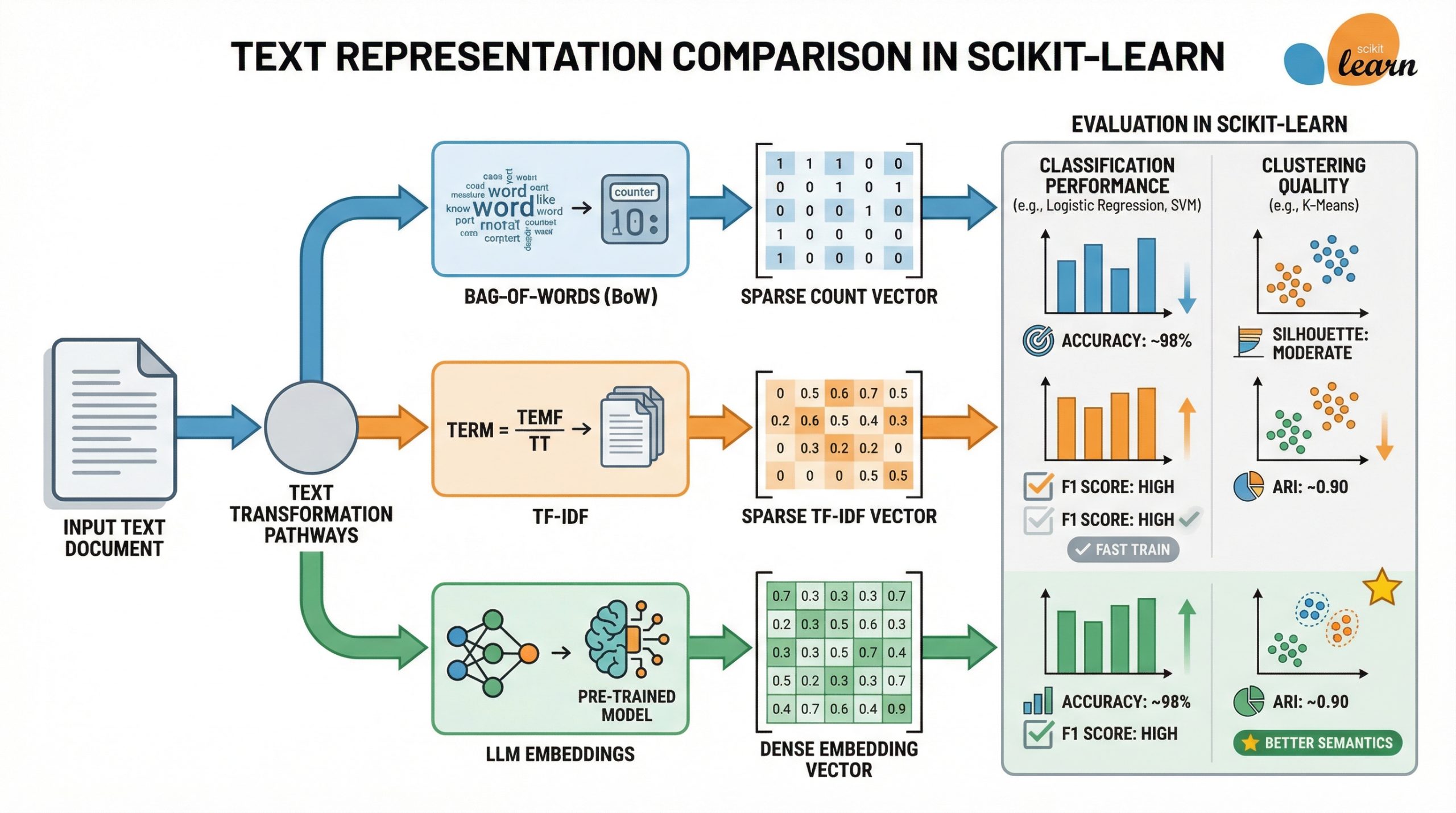

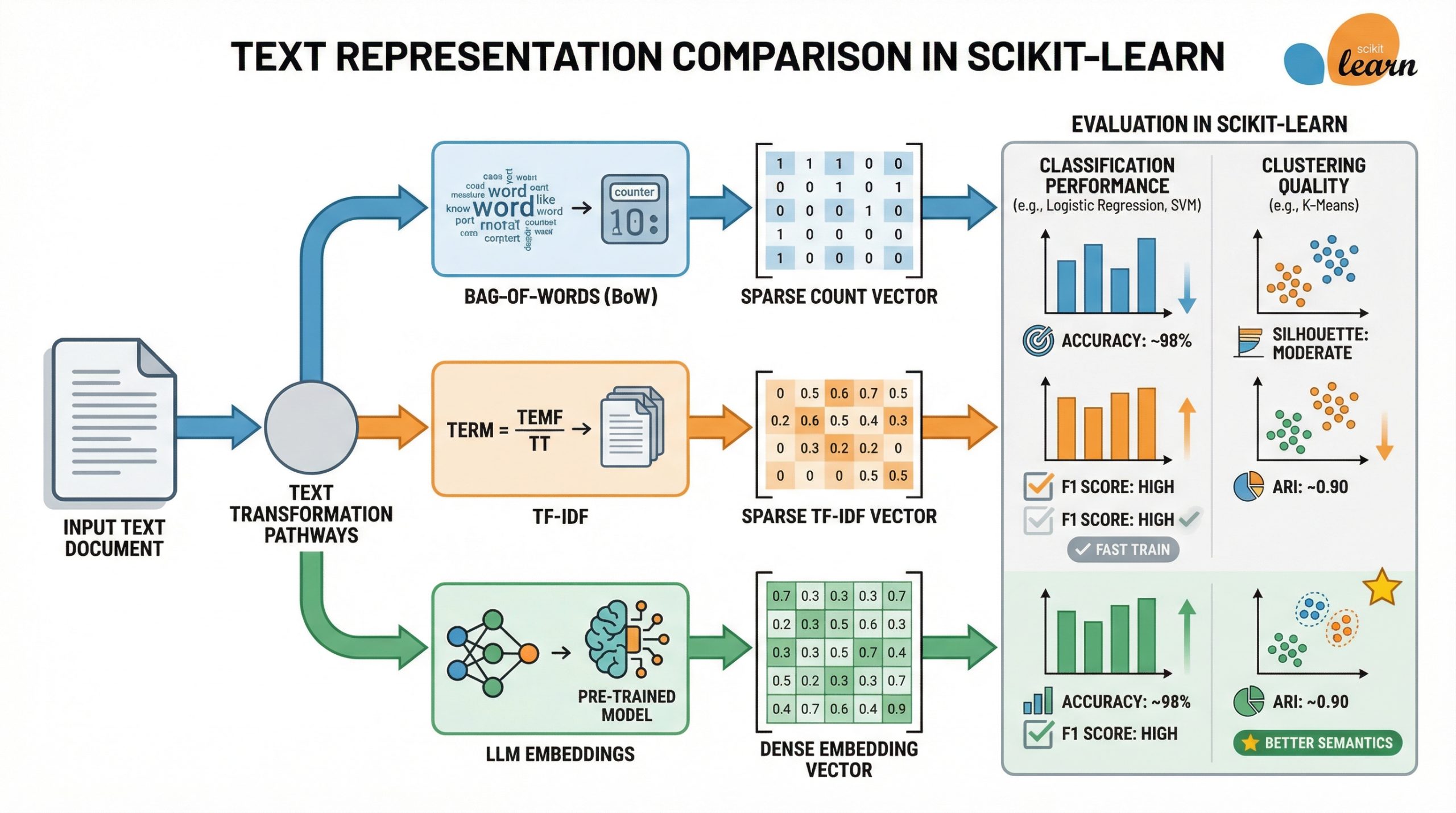

LLM Embeddings vs TF-IDF vs Bag-of-Words: Which Works Better in Scikit-learn?

Machine learning models built with frameworks like scikit-learn can accommodate unstructured data like text, as long as the raw text is first converted into a numerical representation that algorithms can process.

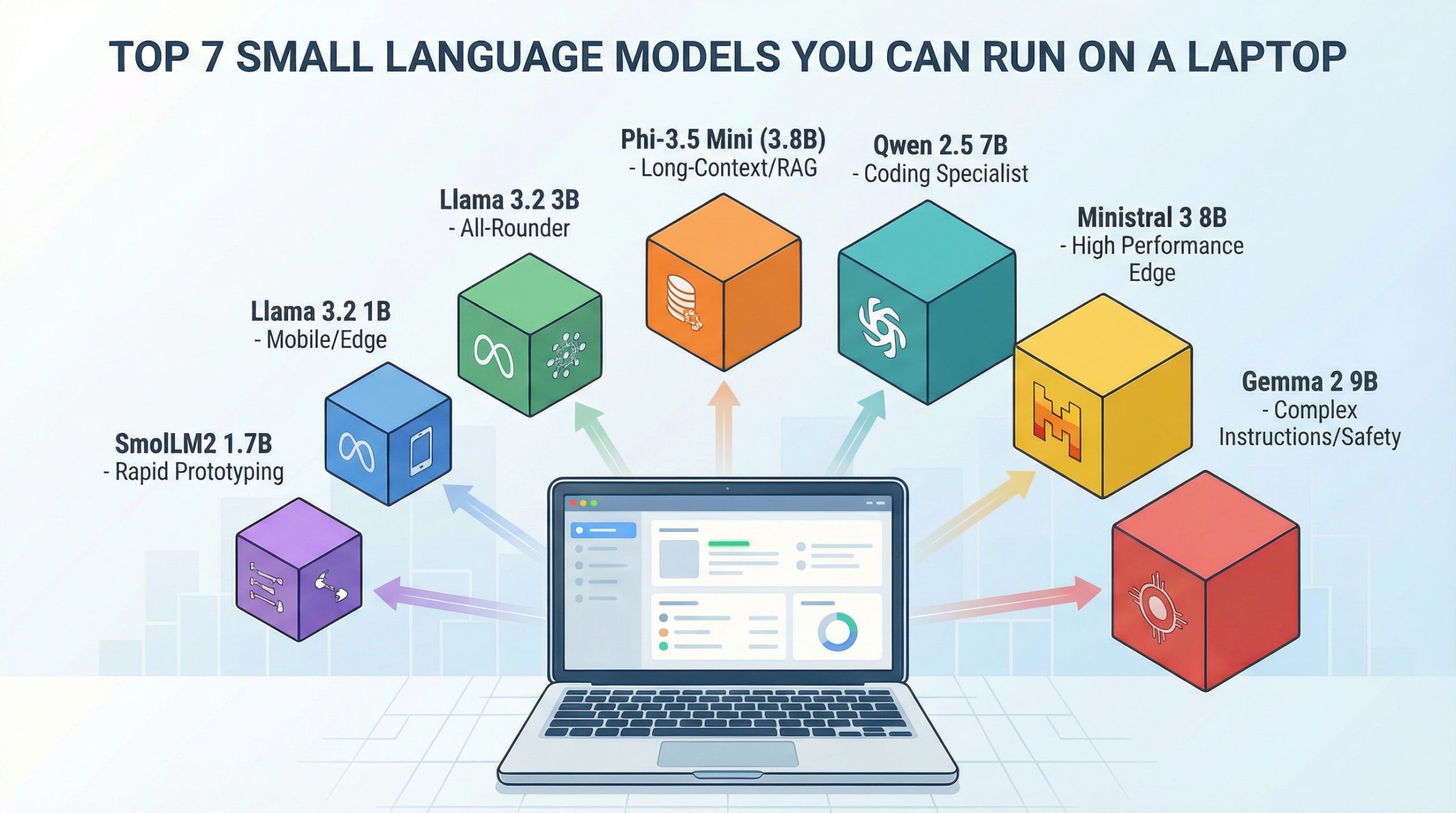

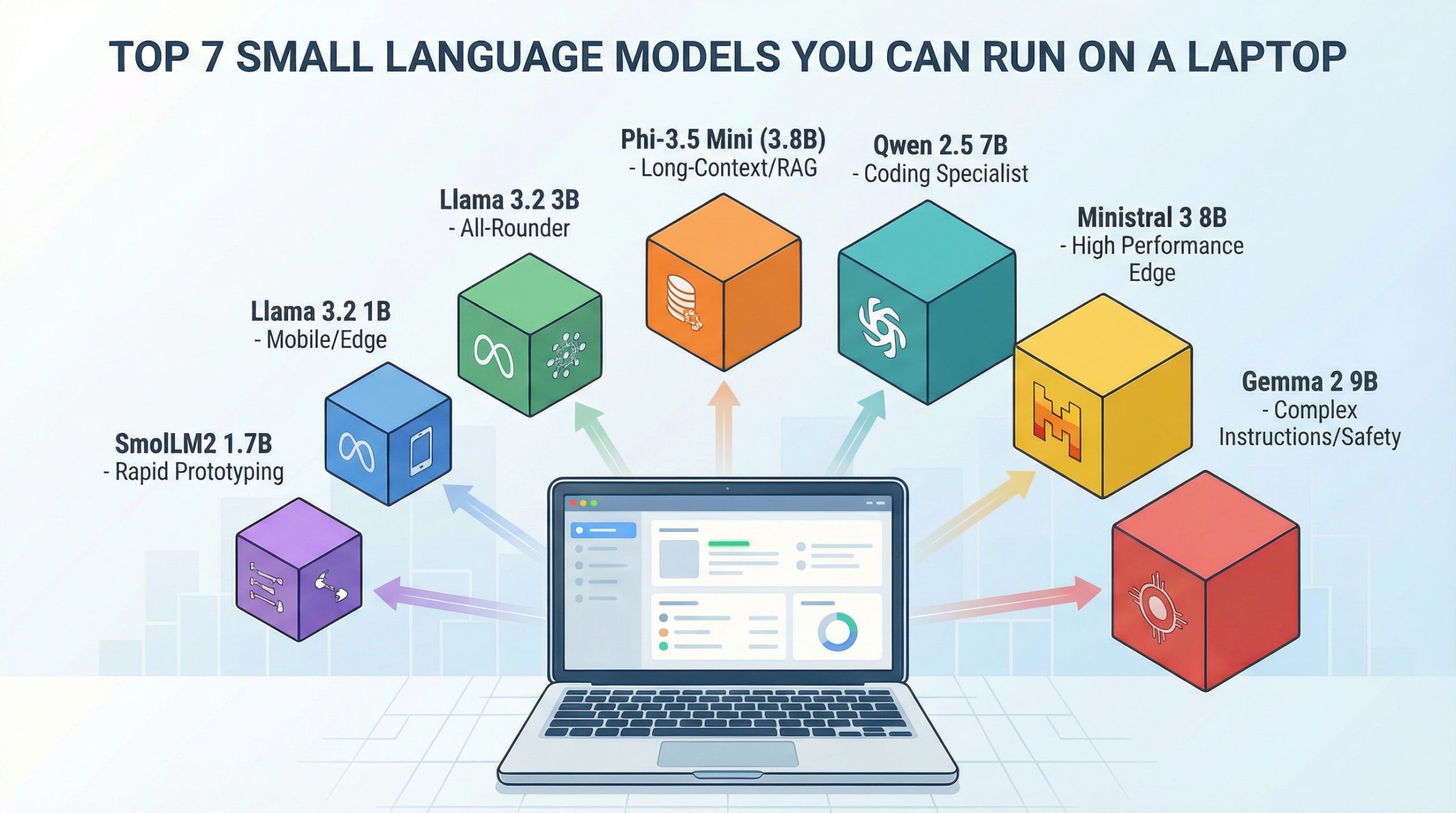

Top 7 Small Language Models You Can Run on a Laptop

Powerful AI now runs on consumer hardware.

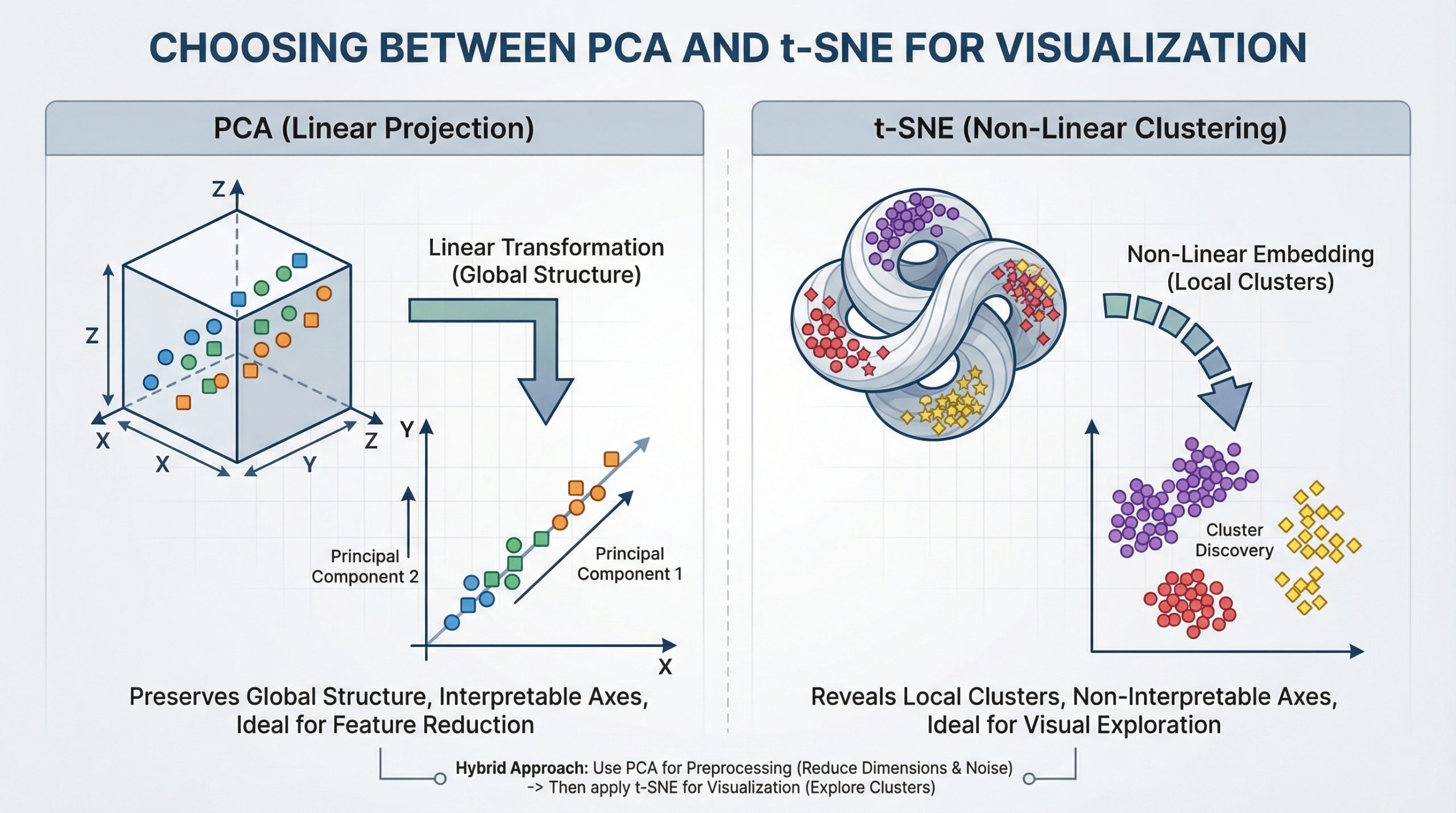

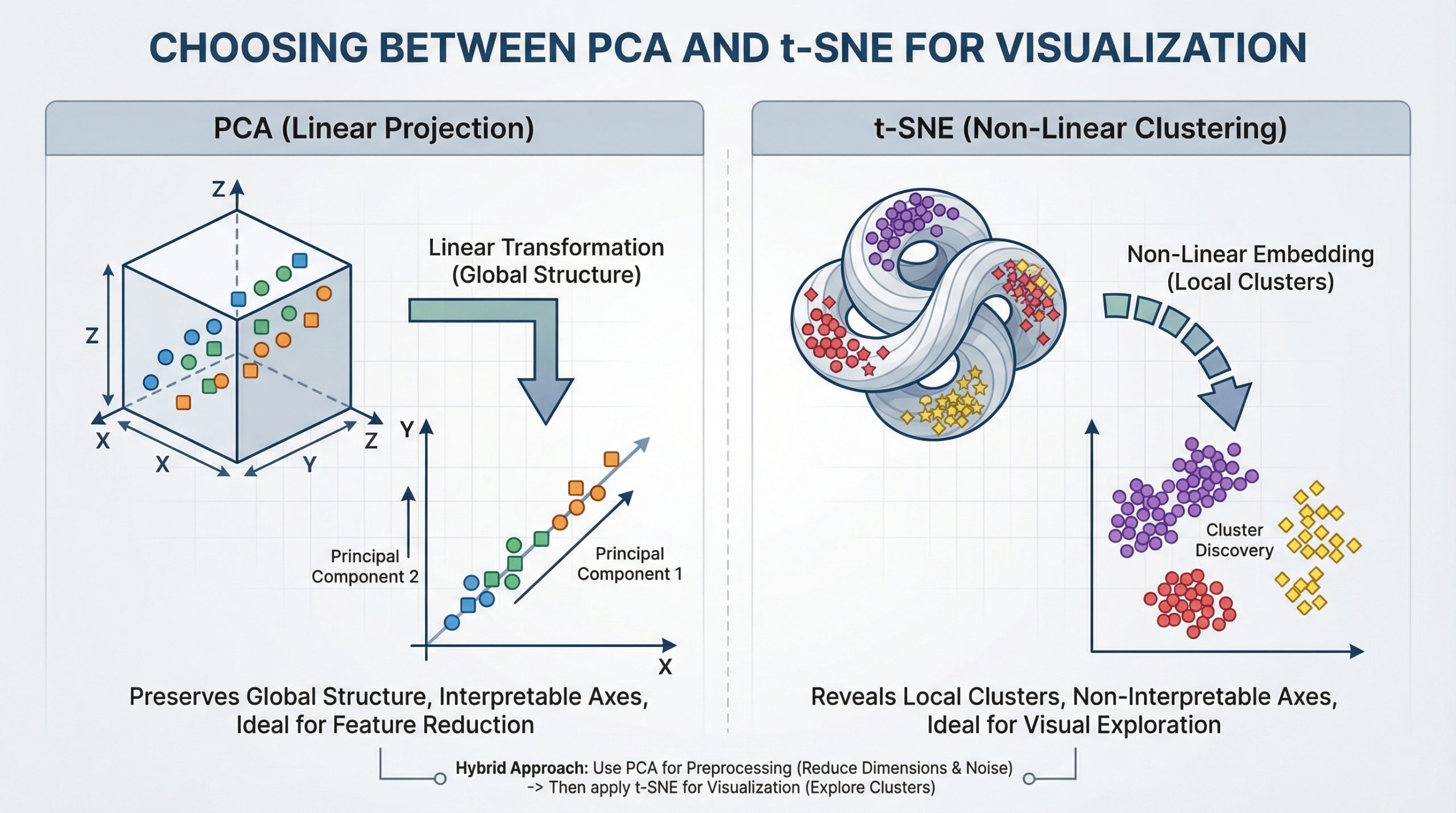

Choosing Between PCA and t-SNE for Visualization

For data scientists, working with high-dimensional data is part of daily life.

Export Your ML Model in ONNX Format

When building machine learning models, training is only half the journey.

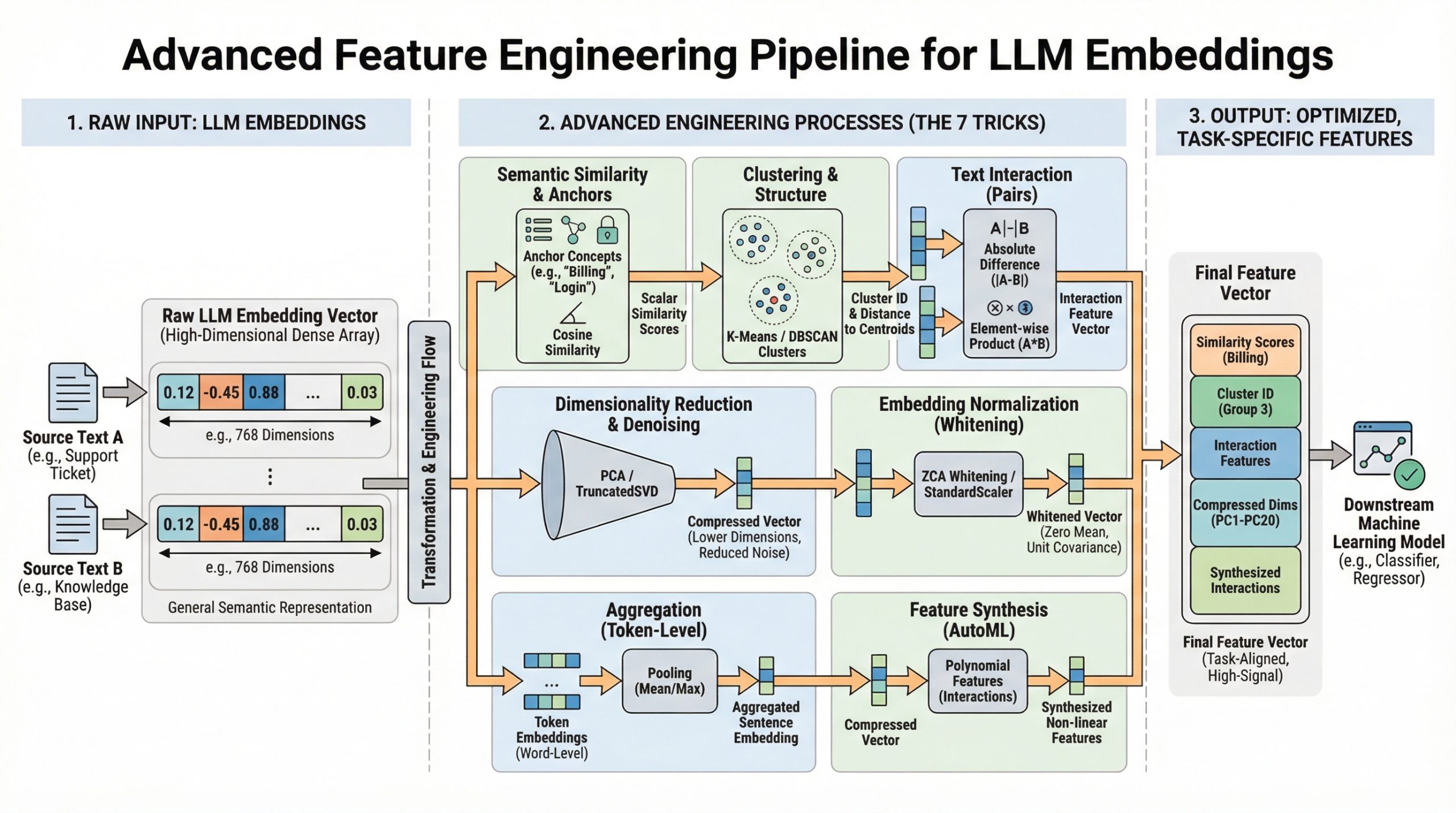

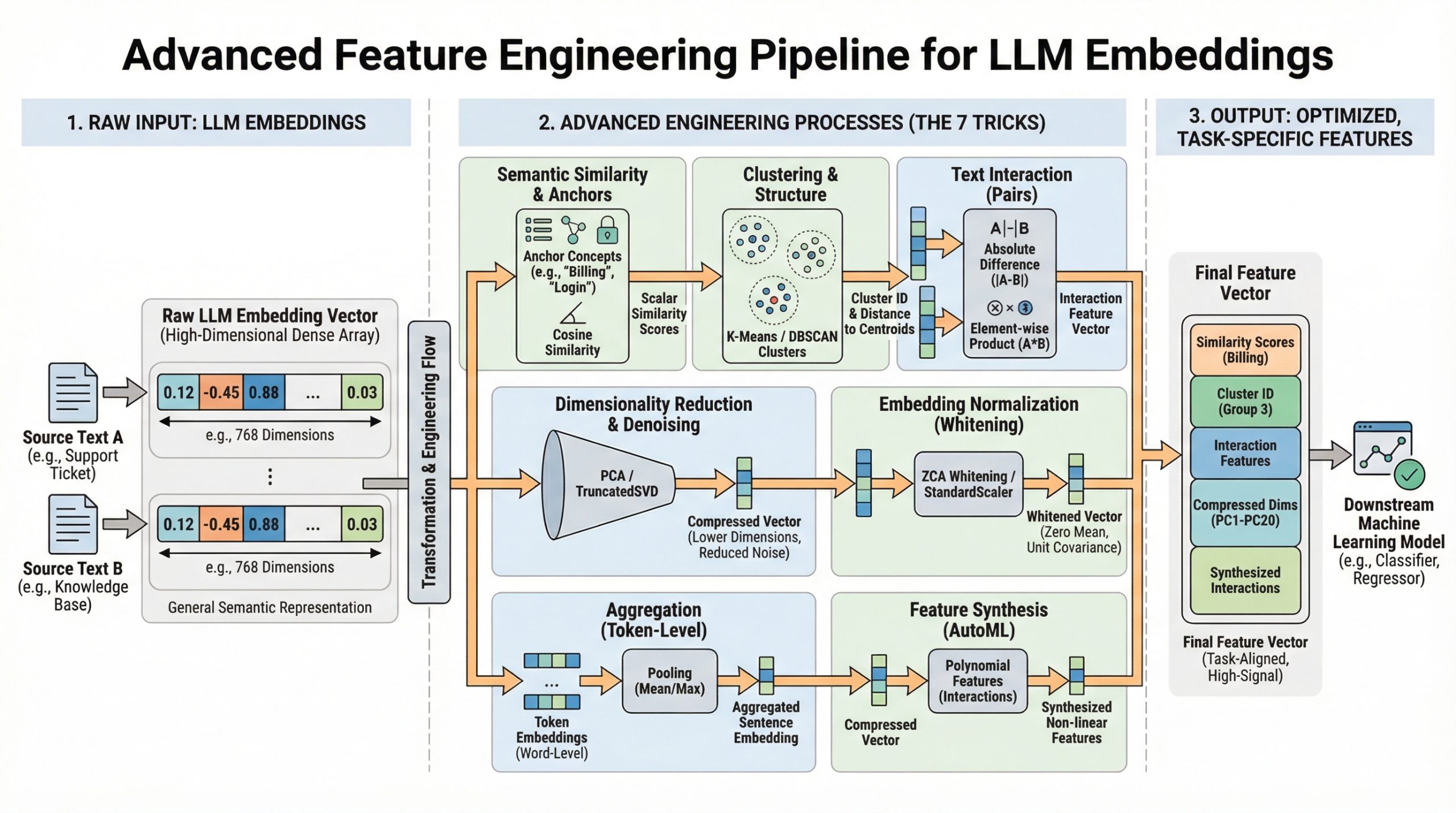

7 Advanced Feature Engineering Tricks Using LLM Embeddings

You have mastered model.

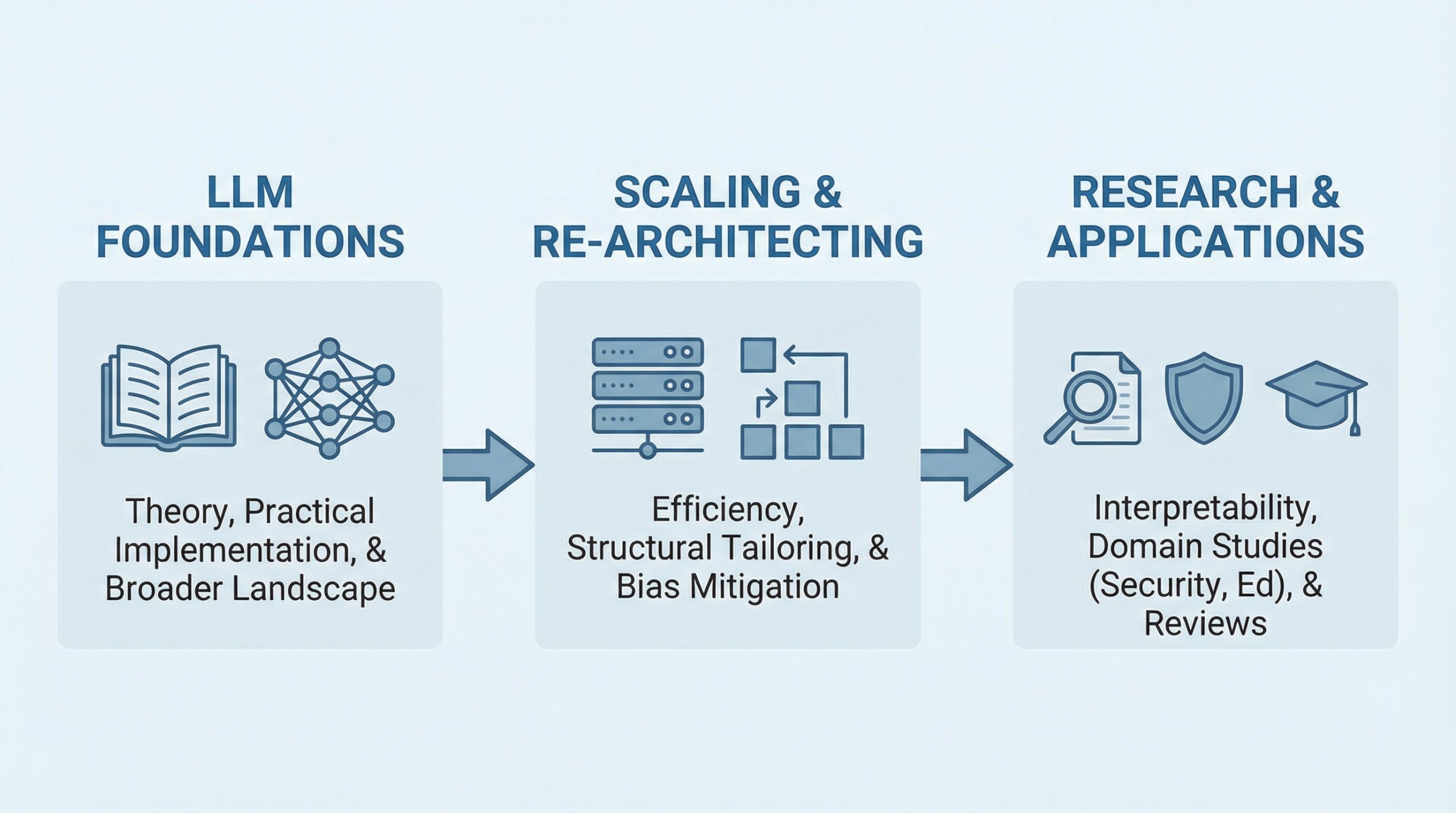

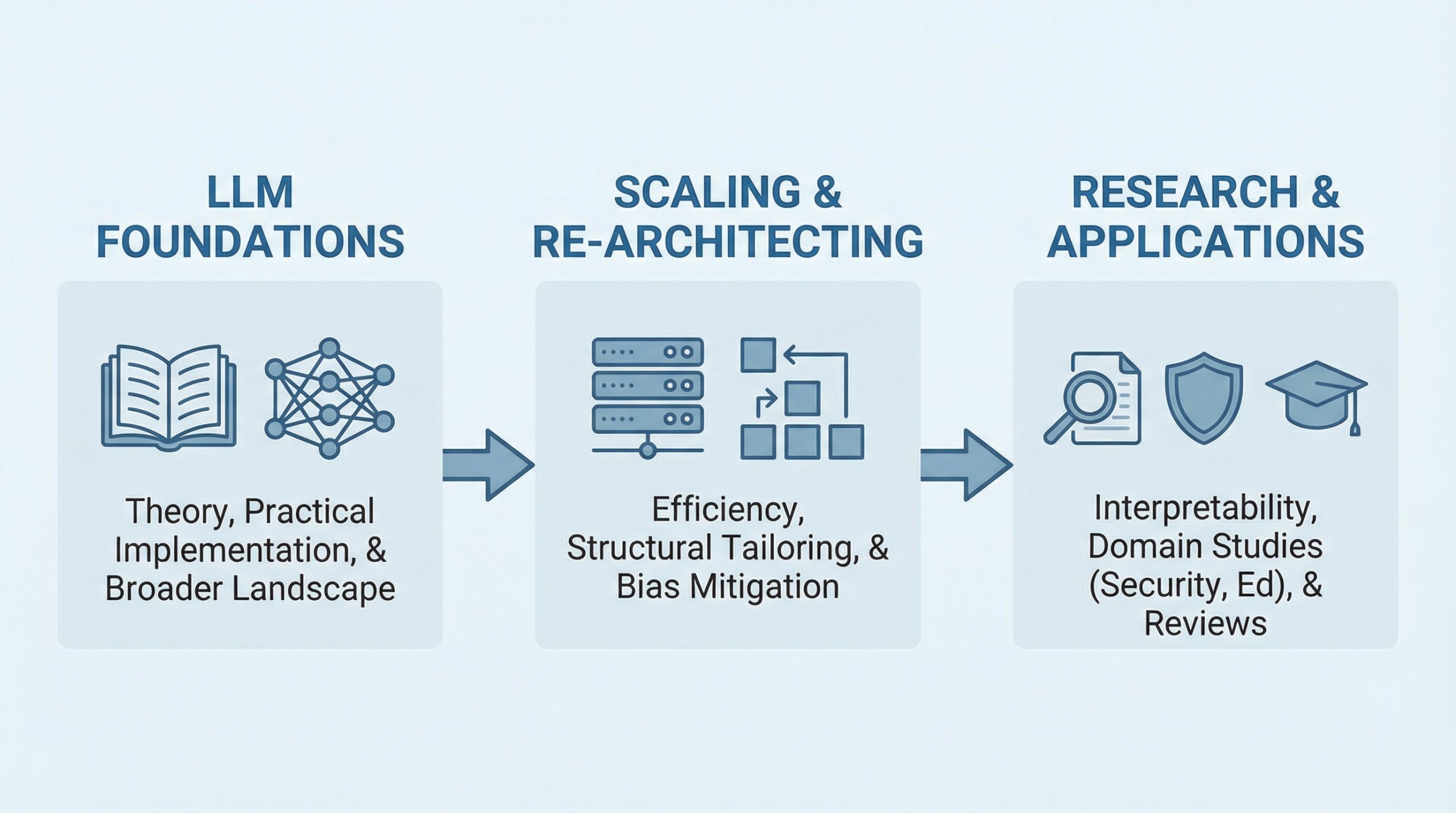

A Beginner’s Reading List for Large Language Models for 2026

The large language models (LLMs) hype wave shows no sign of fading anytime soon: after all, LLMs keep reinventing themselves at a rapid pace and transforming the industry as a whole.

7 Important Considerations Before Deploying Agentic AI in Production

The promise of agentic AI is compelling: autonomous systems that reason, plan, and execute complex tasks with minimal human intervention.

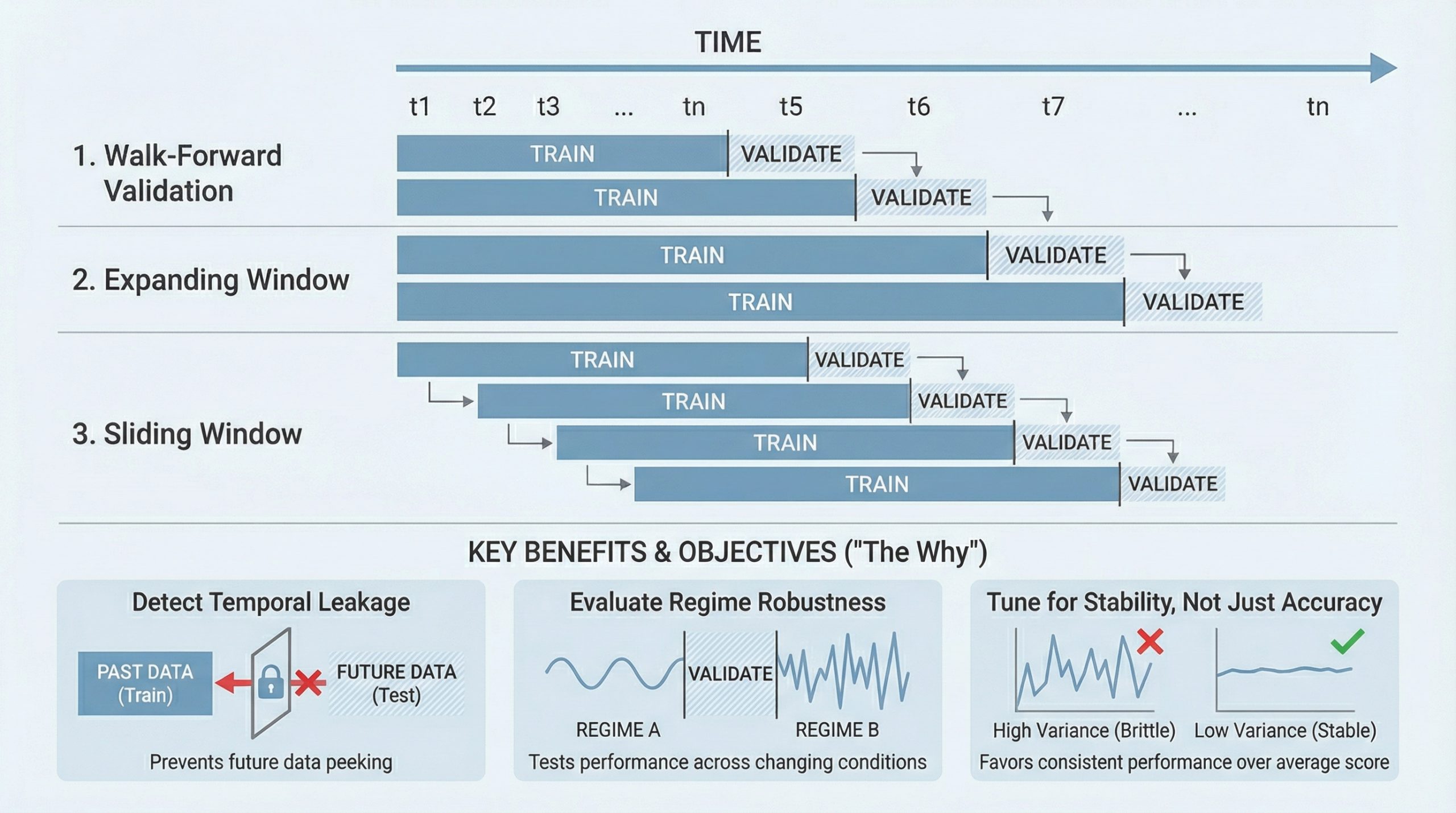

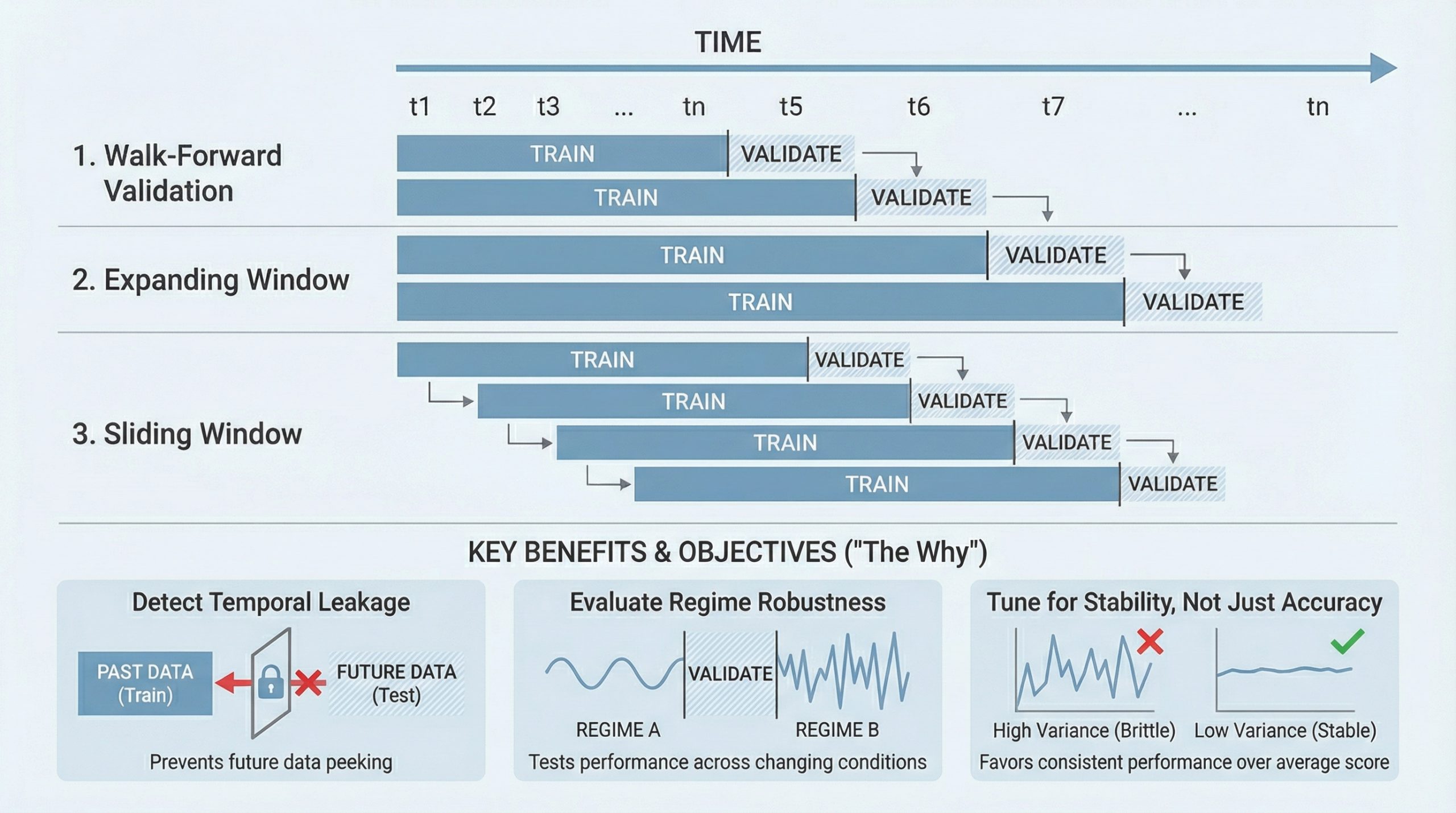

5 Ways to Use Cross-Validation to Improve Time Series Models

Time series modeling…

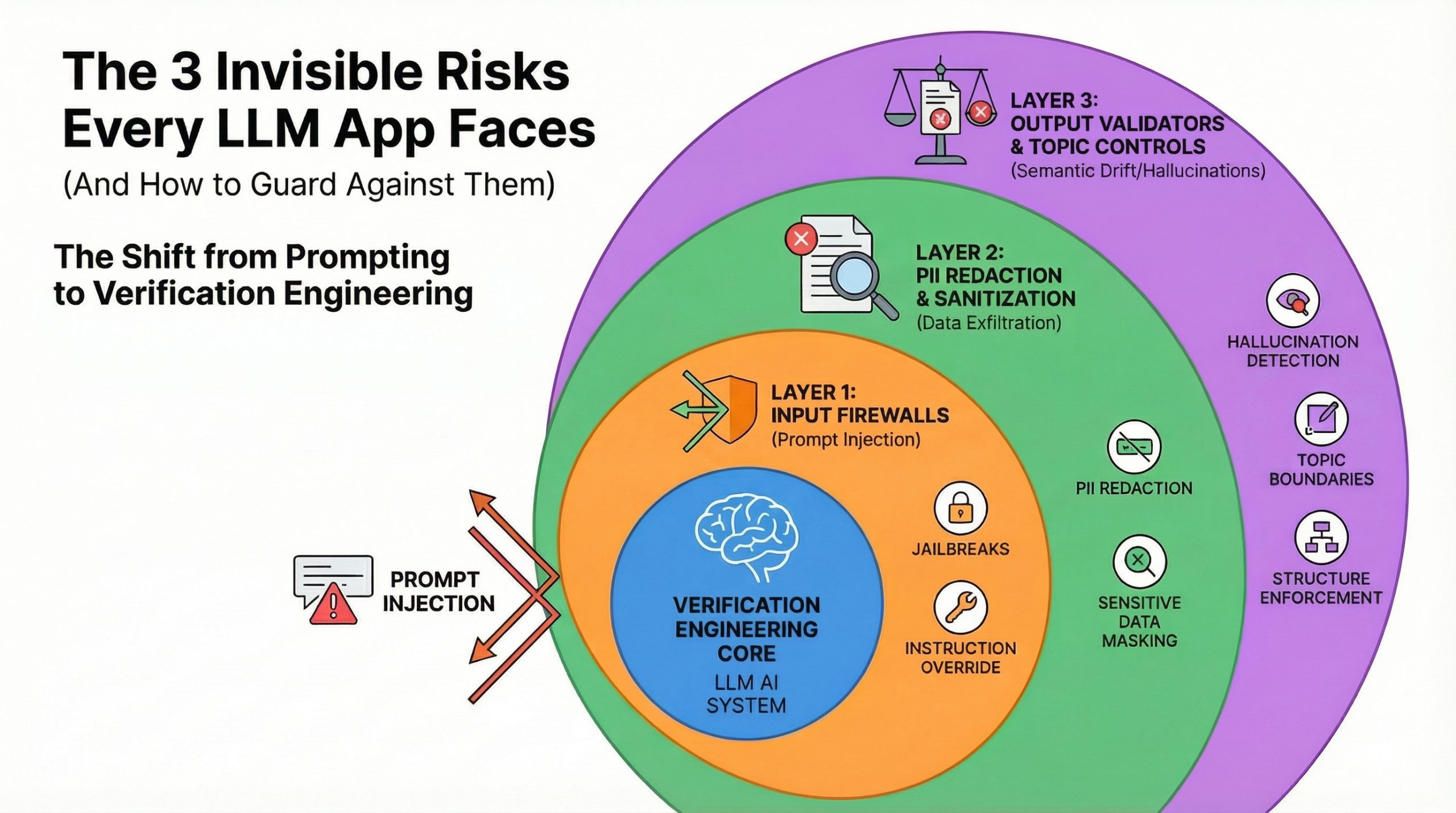

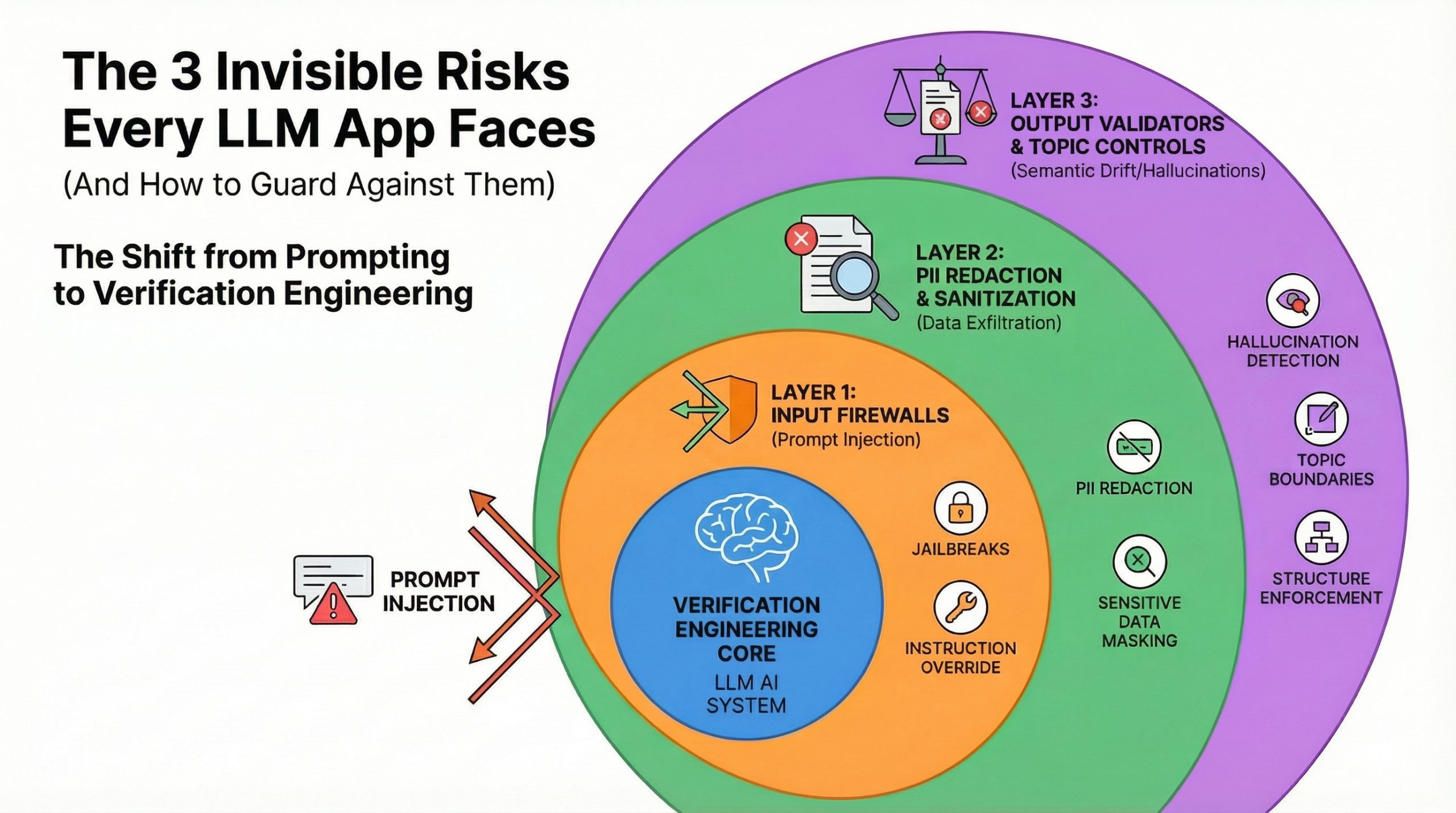

The 3 Invisible Risks Every LLM App Faces (And How to Guard Against Them)

Building a chatbot prototype takes hours.

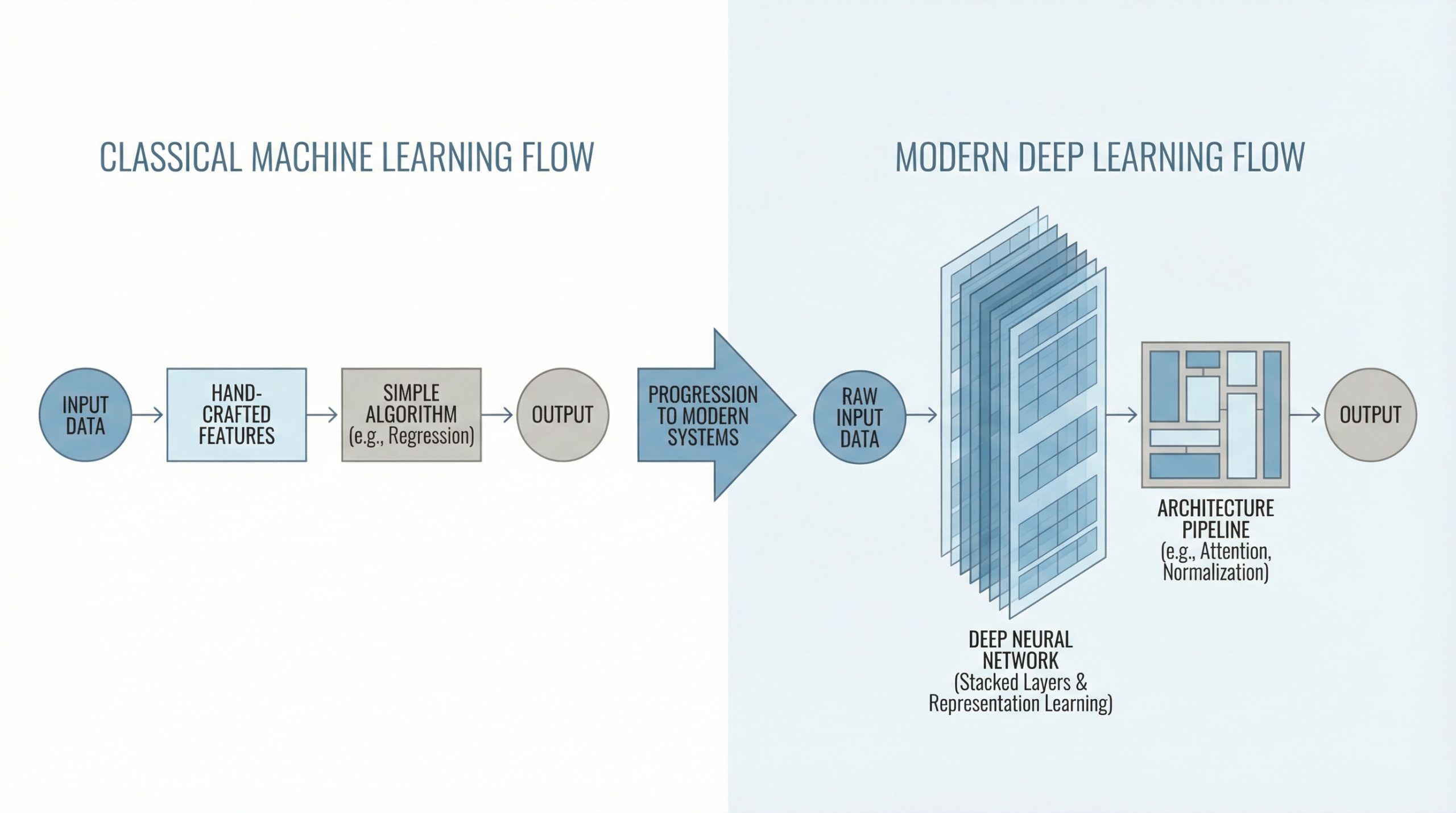

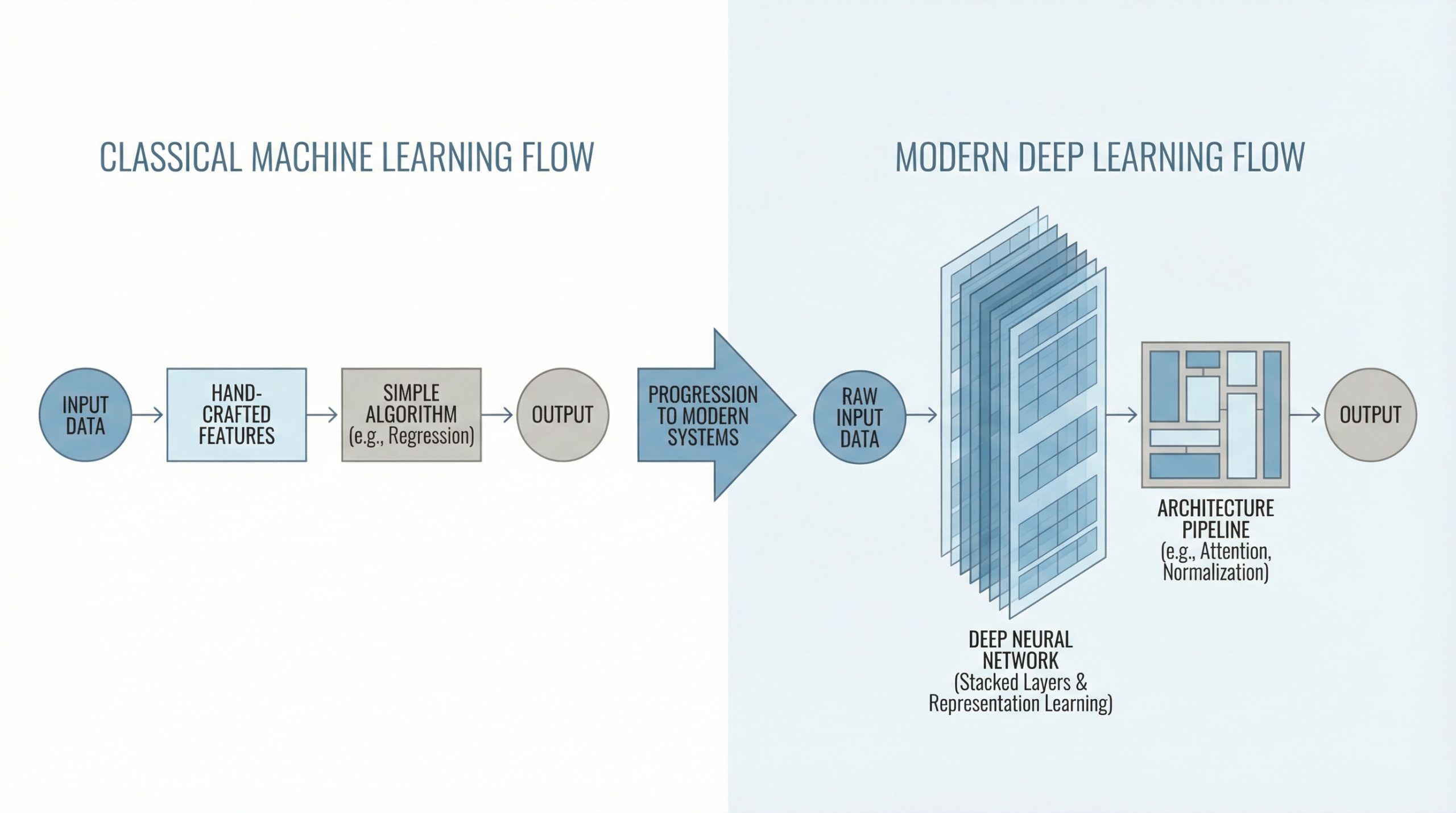

Leveling Up Your Machine Learning: What To Do After Andrew Ng’s Course

Finishing Andrew Ng's machine learning course…

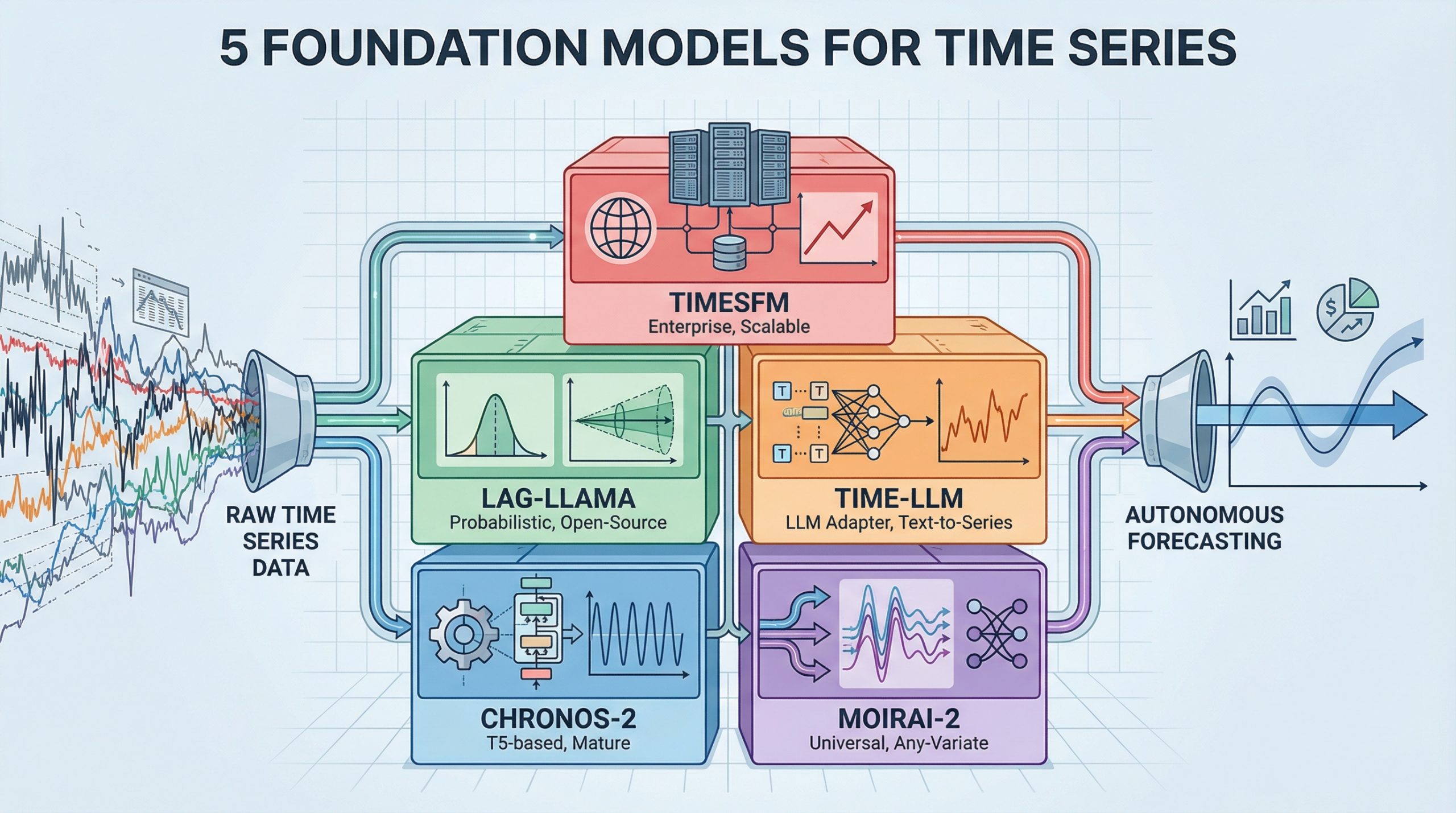

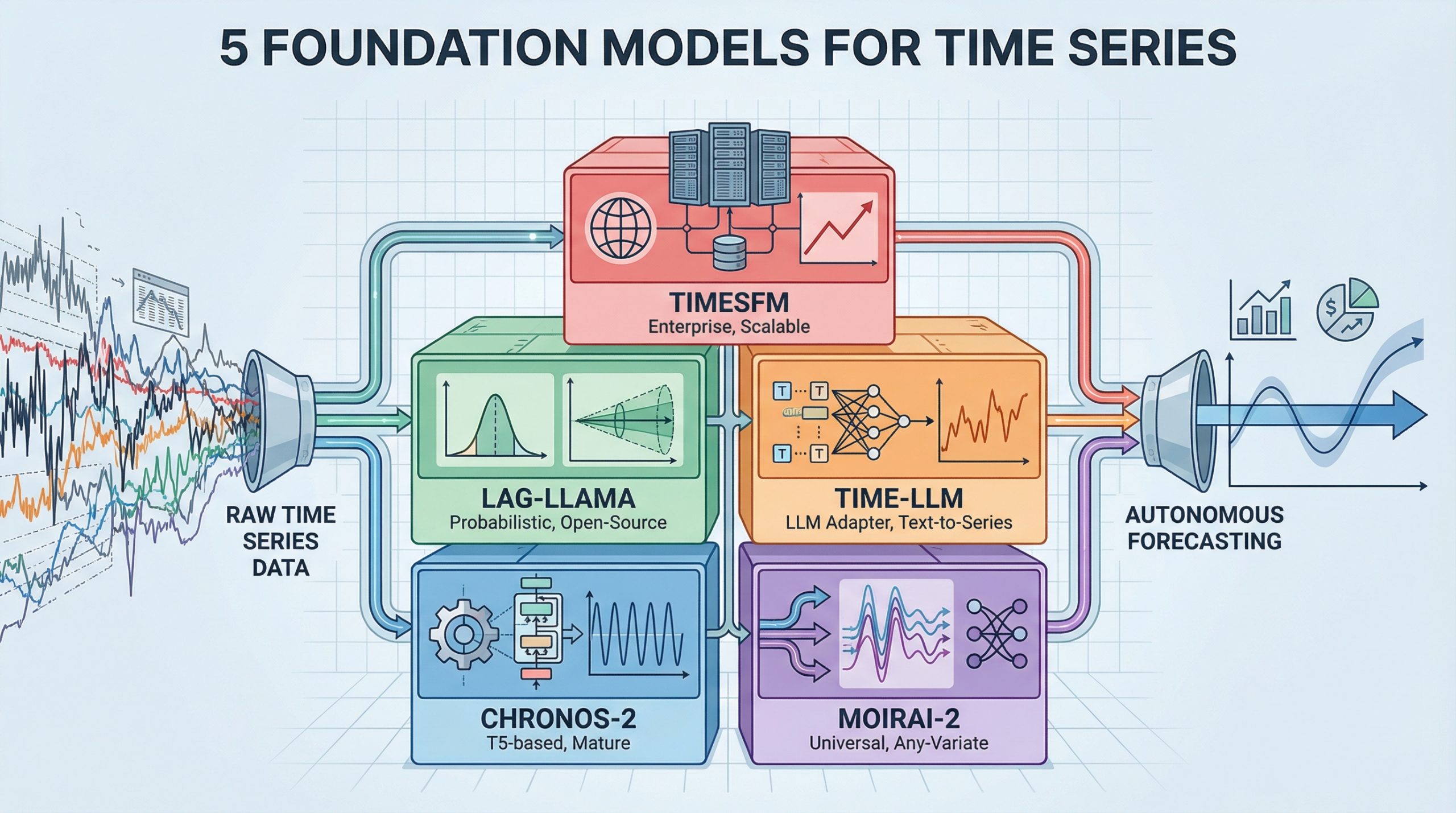

The 2026 Time Series Toolkit: 5 Foundation Models for Autonomous Forecasting

Most forecasting work involves building custom models for each dataset: fit an ARIMA here, tune an LSTM there, wrestle with…

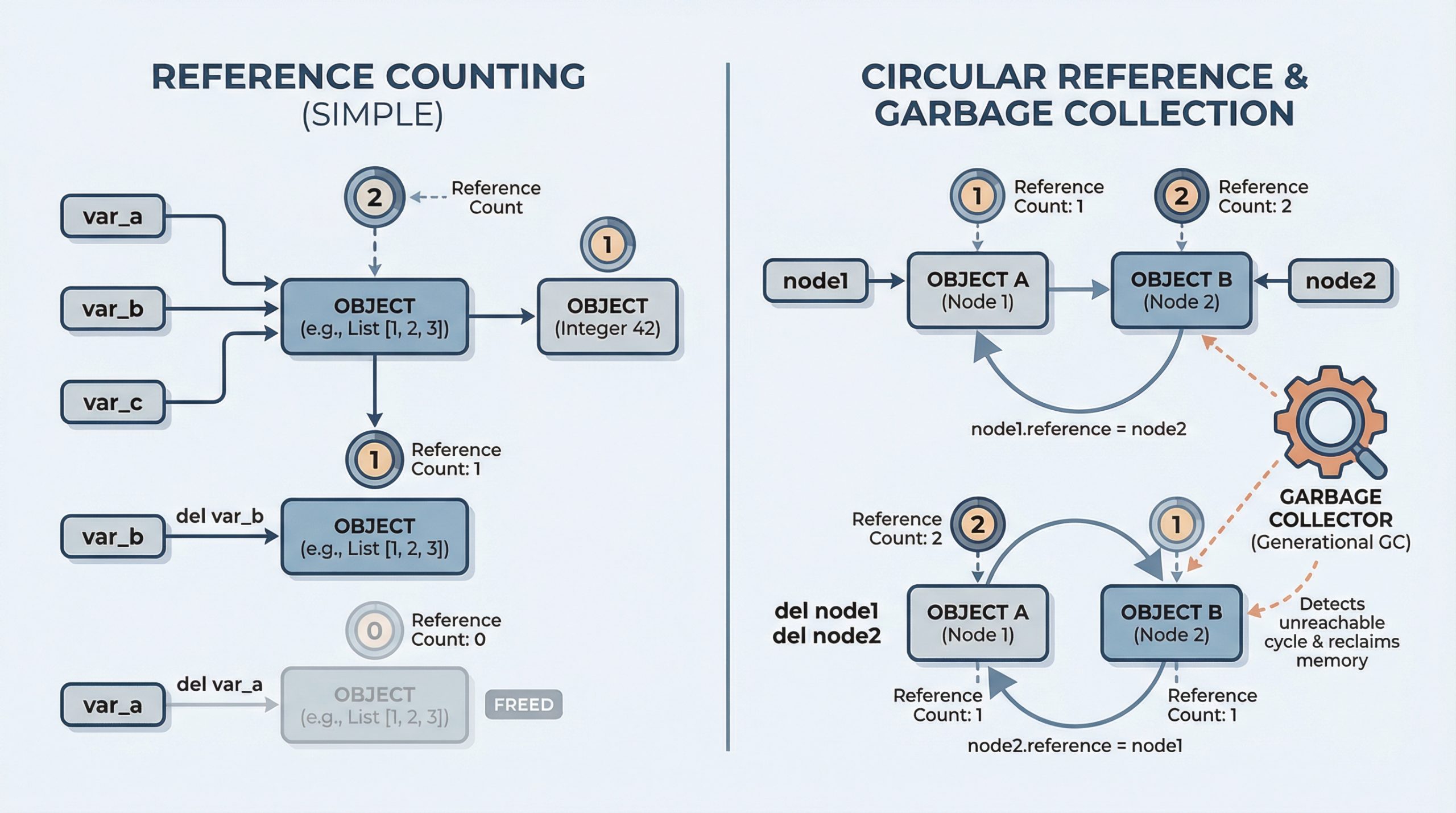

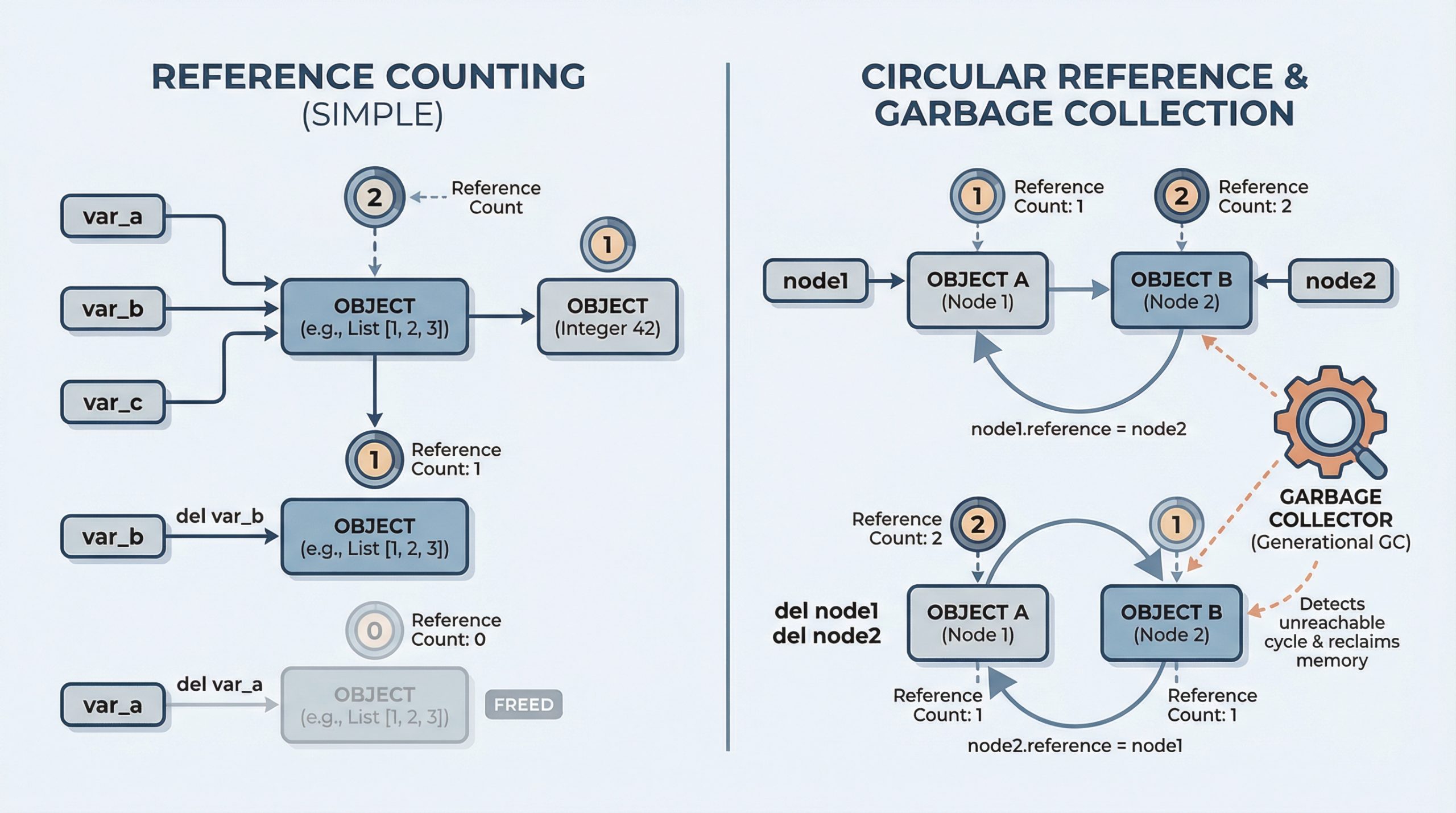

Everything You Need to Know About How Python Manages Memory

In languages like C, you manually allocate and free memory.

The Machine Learning Practitioner’s Guide to Model Deployment with FastAPI

If you’ve trained a machine learning model, a common question comes up: “How do we actually use it?” This is where many machine learning practitioners get stuck.