Export Your ML Model in ONNX Format

When building machine learning models, training is only half the journey.

You have mastered model.

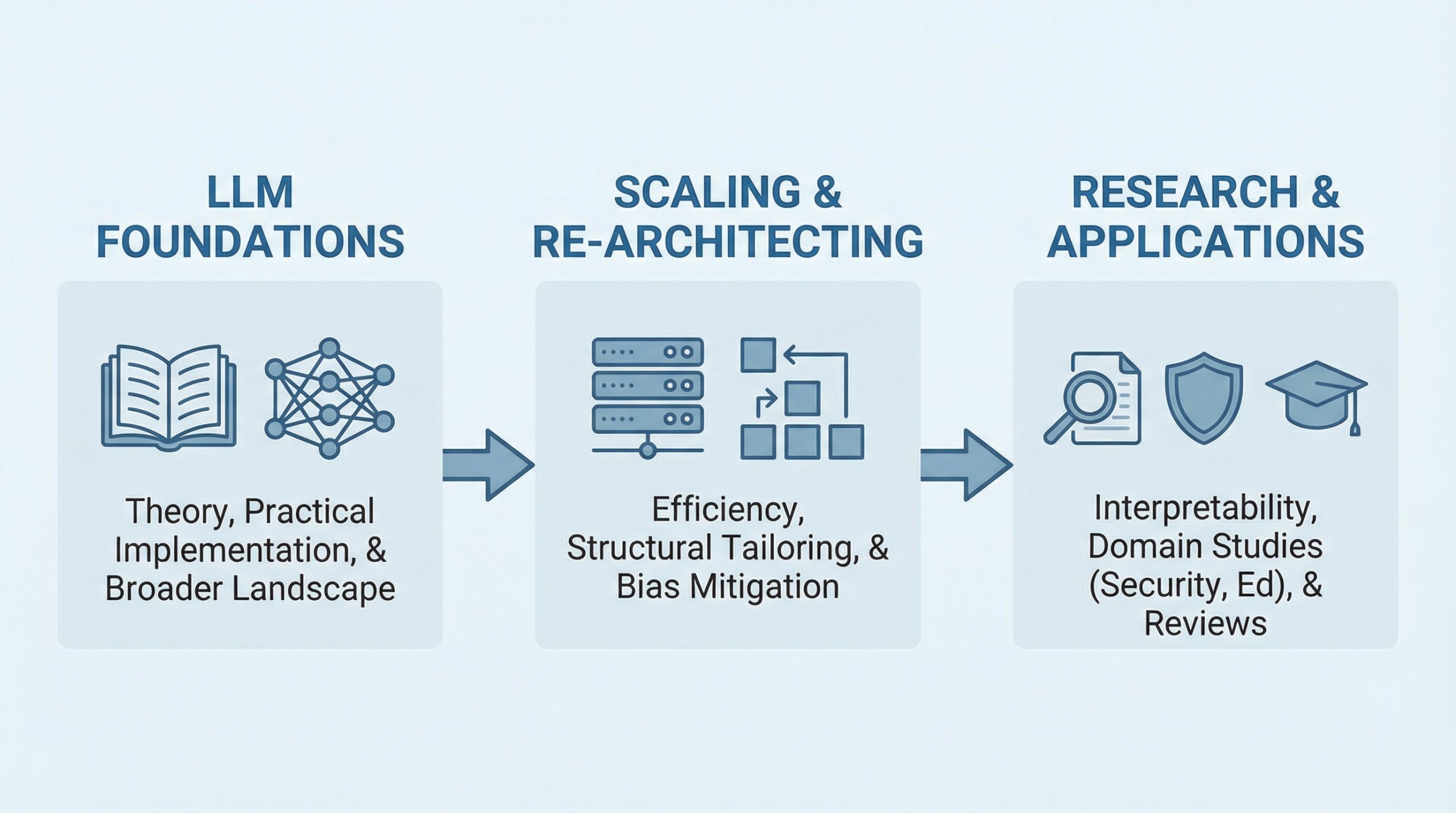

The large language models (LLMs) hype wave shows no sign of fading anytime soon: after all, LLMs keep reinventing themselves at a rapid pace and transforming the industry as a whole.

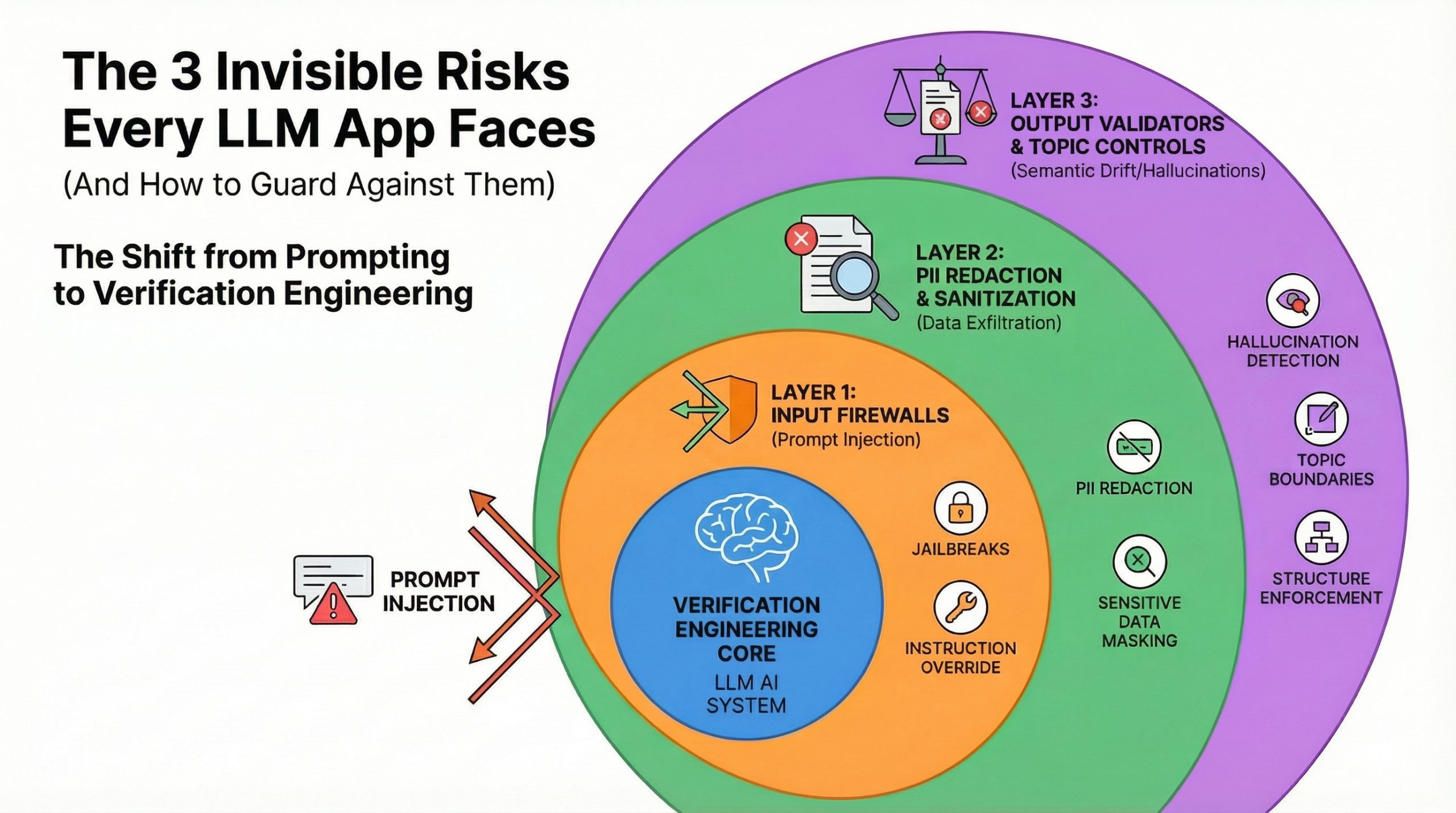

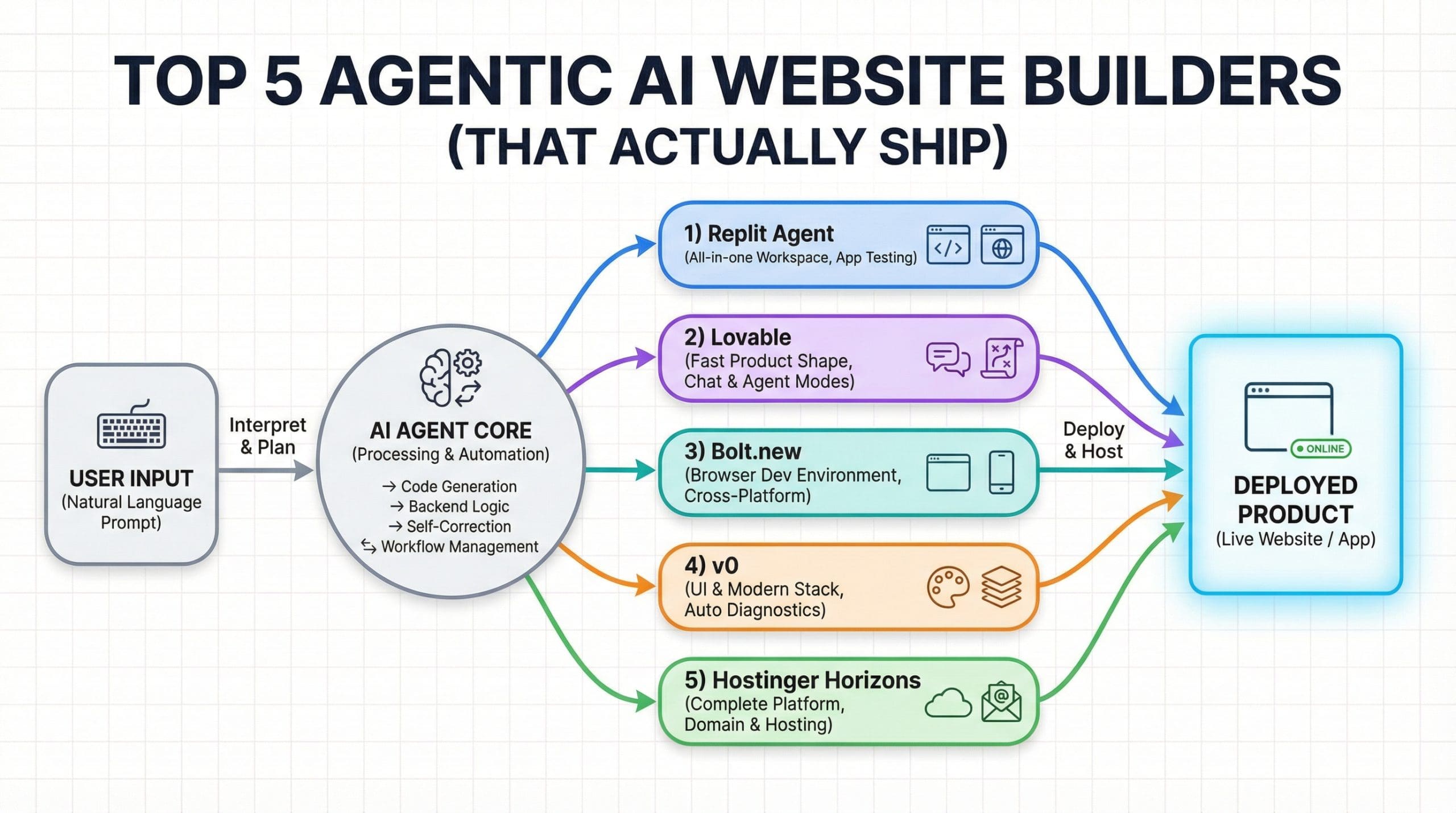

The promise of agentic AI is compelling: autonomous systems that reason, plan, and execute complex tasks with minimal human intervention.

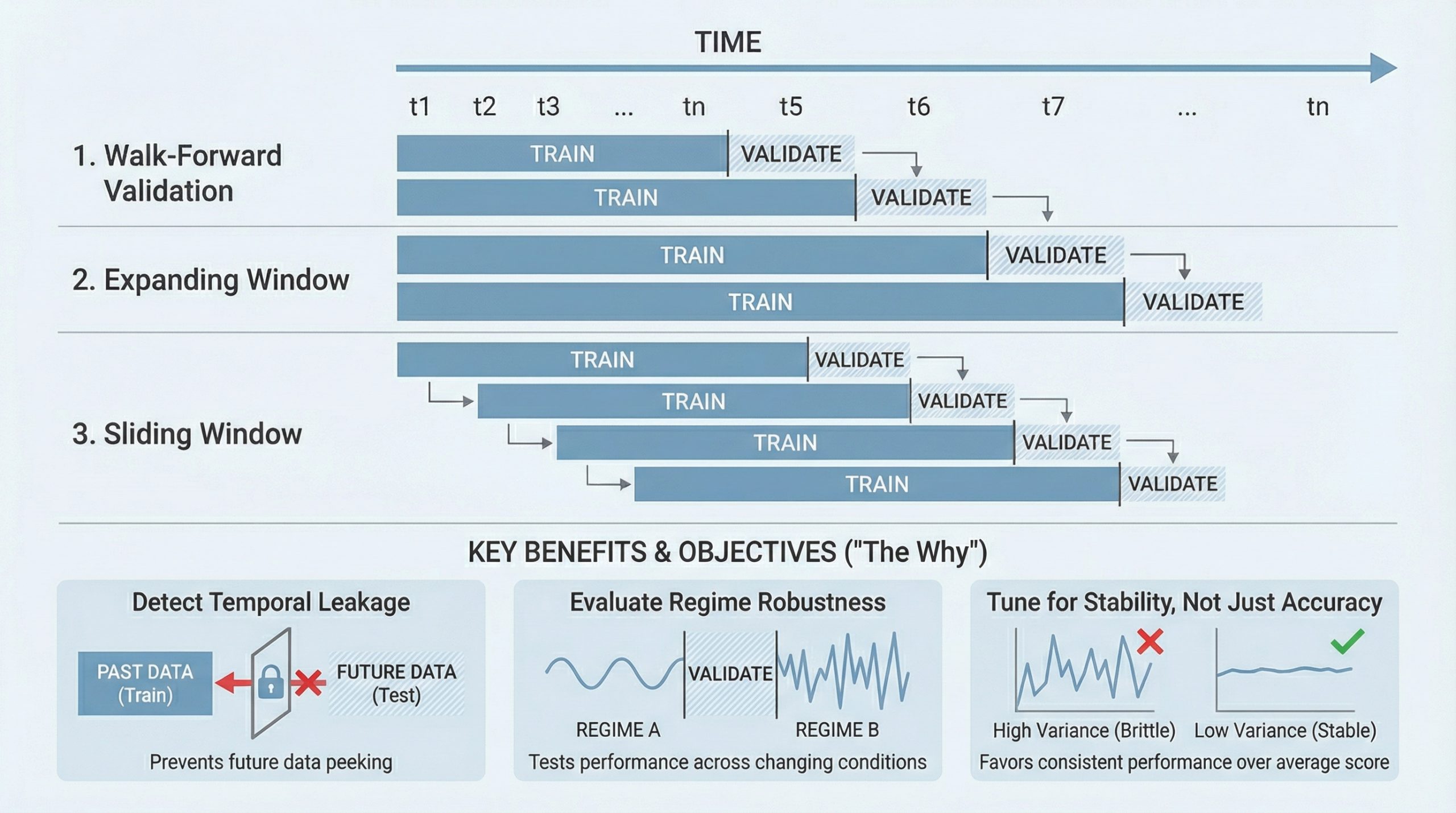

Time series modeling …

Building a chatbot prototype takes hours.

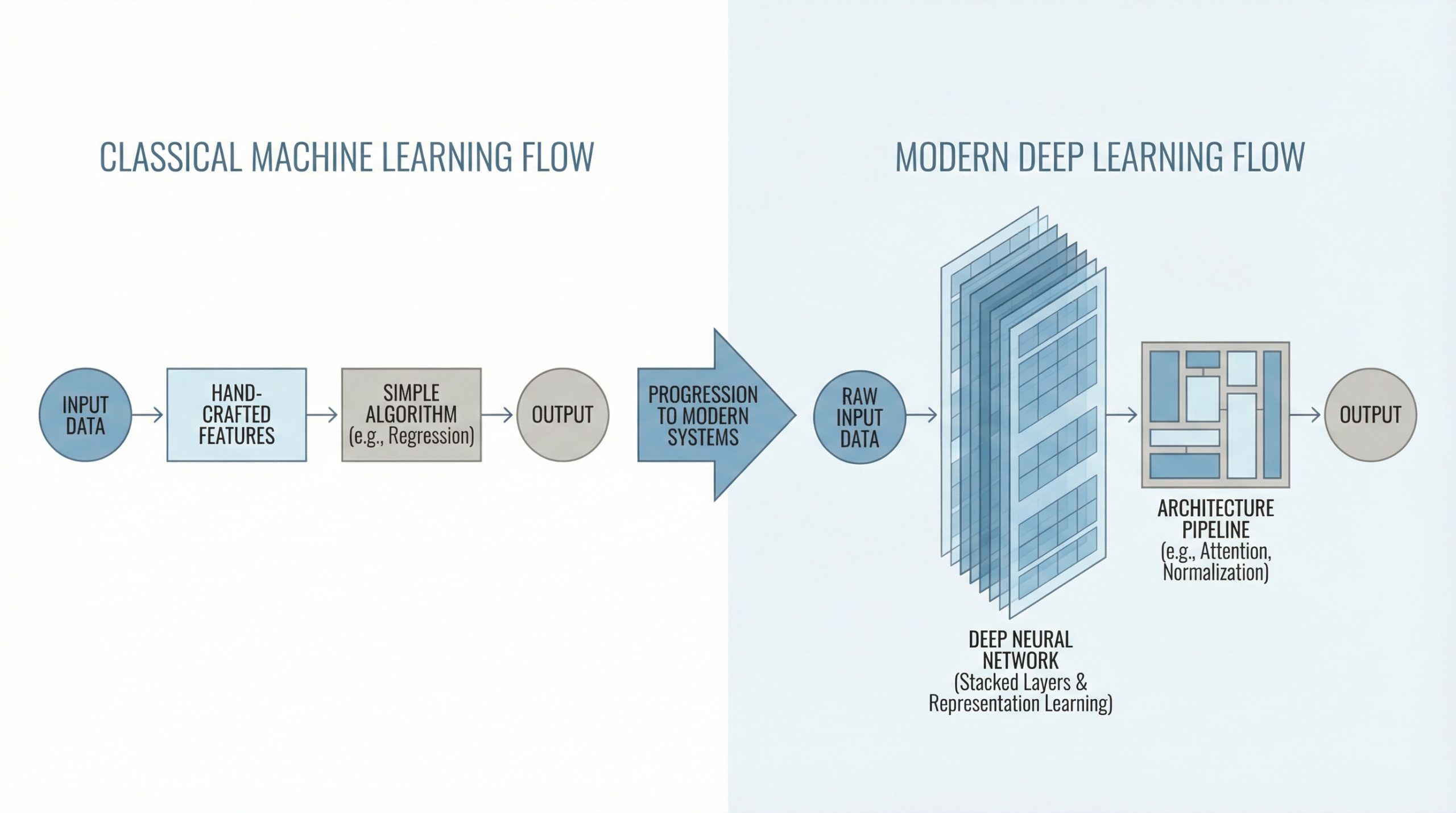

Finishing Andrew Ng's machine learning course …

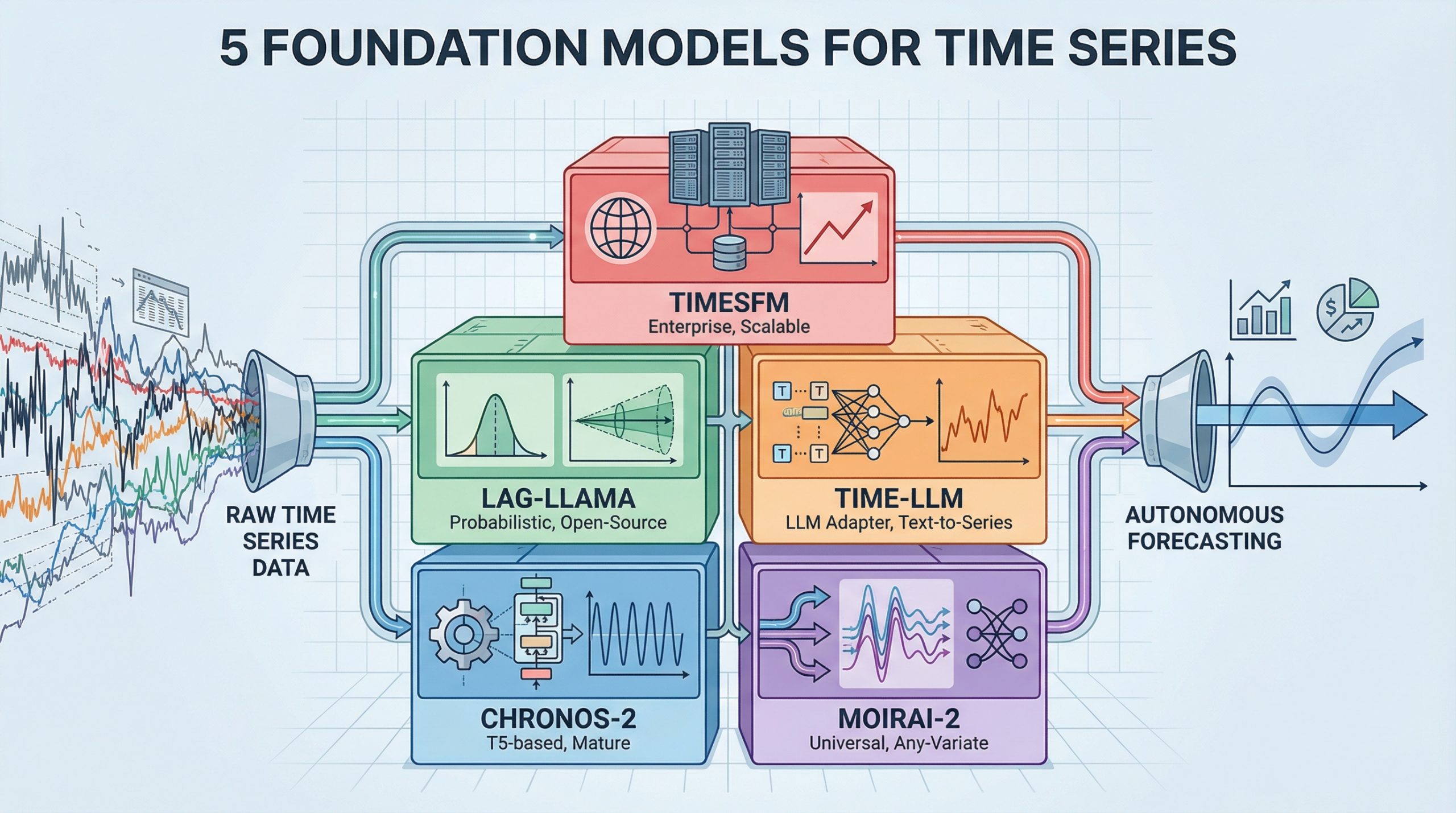

Most forecasting work involves building custom models for each dataset: fit an ARIMA here, tune an LSTM there, wrestle with …

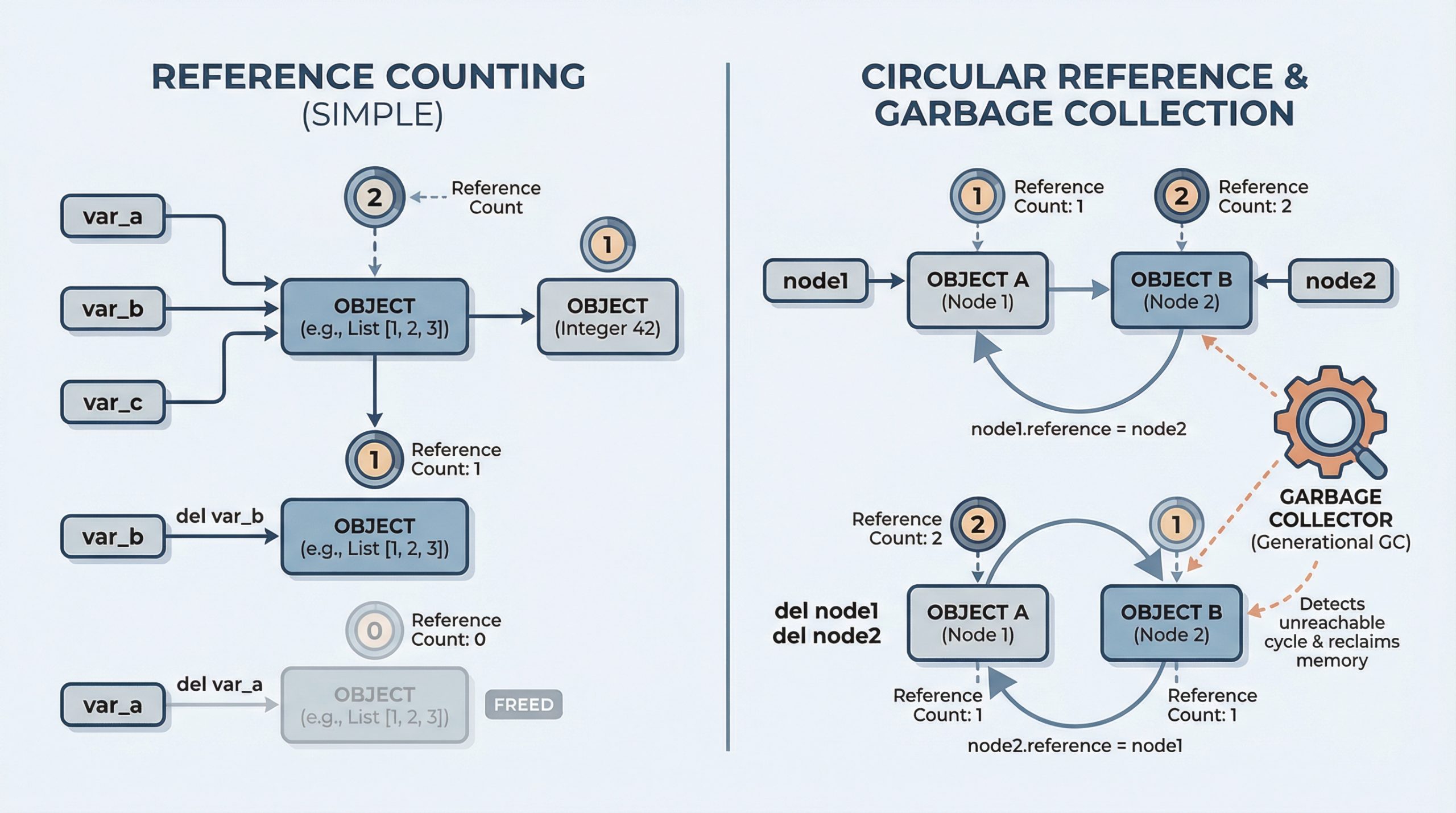

In languages like C, you manually allocate and free memory.

If you’ve trained a machine learning model, a common question comes up: “How do we actually use it?” This is where many machine learning practitioners get stuck.

I have been building a payment platform using vibe coding, and I do not have a frontend background.

Suppose you’ve built your machine learning model, run the experiments, and stared at the results wondering what went wrong.

Computer vision is an area of artificial intelligence that gives computer systems the ability to analyze, interpret, and understand visual data, namely images and videos.

This article is divided into five parts; they are:

- An Example of Tensor Parallelism
- Setting Up Tensor Parallelism
- Preparing Model for Tensor Parallelism
- Train a Model with Tensor Parallelism
- Combining Tensor Parallelism with FSDP

Tensor parallelism originated from the Megatron-LM paper.

This article is divided into five parts; they are:

- Introduction to Fully Sharded Data Parallel
- Preparing Model for FSDP Training
- Training Loop with FSDP
- Fine-Tuning FSDP Behavior
- Checkpointing FSDP Models

Sharding is a term originally used in database management systems, where it refers to dividing a database into smaller units, called shards, to improve performance.
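To make the idea of sharding concrete, here is a minimal sketch in plain Python. It is not FSDP and uses no PyTorch API; the `shard` helper and the `records` list are hypothetical names chosen for illustration. It only shows the general notion of dividing a collection into roughly equal units that could each be handled by a separate worker.

```python
def shard(items, num_shards):
    """Split items into num_shards contiguous shards whose sizes differ by at most one."""
    base, extra = divmod(len(items), num_shards)
    shards = []
    start = 0
    for i in range(num_shards):
        # The first `extra` shards each take one additional item.
        size = base + (1 if i < extra else 0)
        shards.append(items[start:start + size])
        start += size
    return shards


# Hypothetical data: ten records divided among three workers.
records = list(range(10))
shards = shard(records, 3)
for worker_id, part in enumerate(shards):
    print(f"worker {worker_id} holds {part}")
```

Concatenating the shards in order gives back the original collection, which is the property any sharding scheme needs so that the whole can be reassembled when required.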

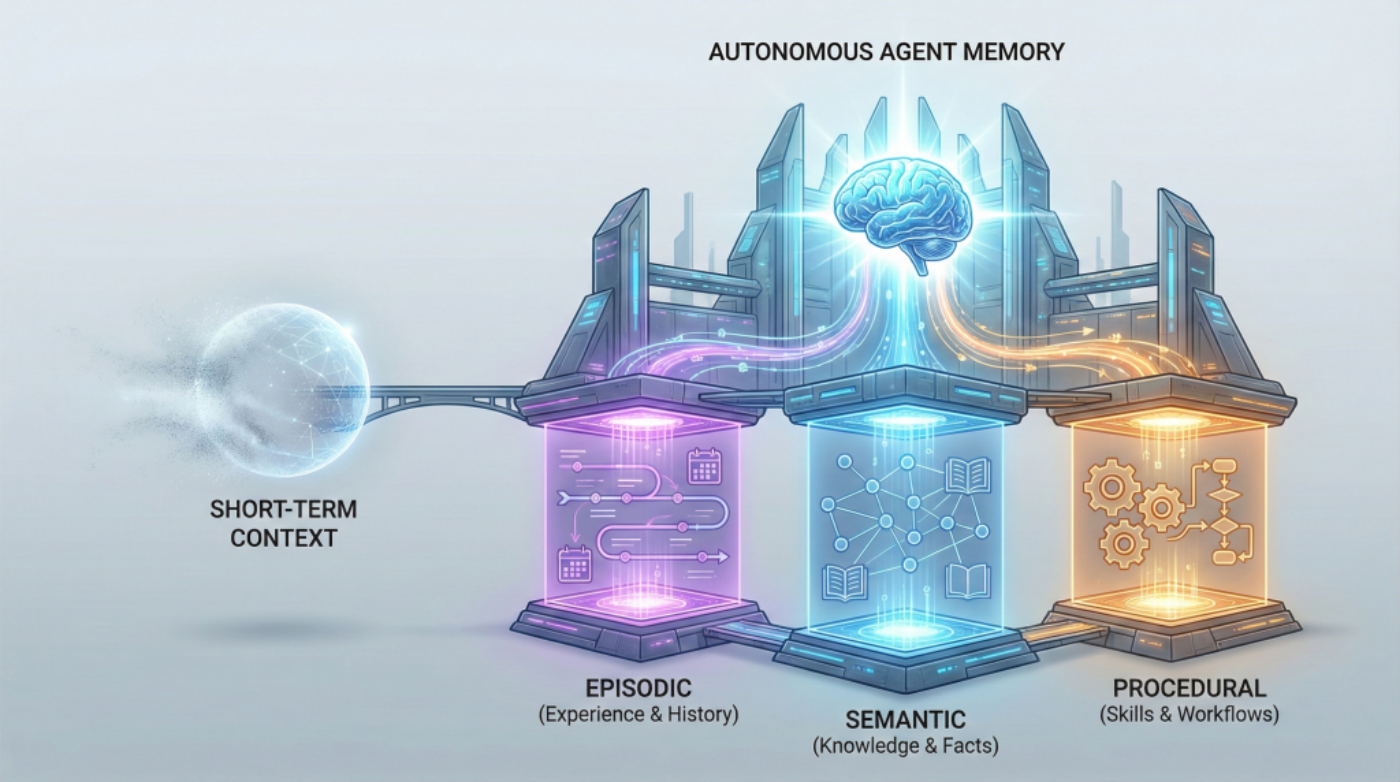

If you've built chatbots or worked with language models, you're already familiar with how AI systems handle memory within a single conversation.

This article is divided into six parts; they are:

- Pipeline Parallelism Overview
- Model Preparation for Pipeline Parallelism
- Stage and Pipeline Schedule
- Training Loop
- Distributed Checkpointing
- Limitations of Pipeline Parallelism

Pipeline parallelism means creating the model as a pipeline of stages.
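As a rough illustration of "a model as a pipeline of stages", the sketch below chains three ordinary Python functions and feeds micro-batches through them. Everything here is an assumption made for illustration: the stage functions, `make_pipeline`, and the micro-batch values are hypothetical, and the stages run one after another on a single process. A real pipeline-parallel setup would place each stage on a different device and overlap micro-batches across stages.

```python
def make_pipeline(stages):
    """Return a function that passes its input through each stage in order."""
    def run(x):
        for stage in stages:
            x = stage(x)
        return x
    return run


# Hypothetical stages standing in for consecutive groups of model layers.
def stage_0(x):
    return [v * 2 for v in x]


def stage_1(x):
    return [v + 1 for v in x]


def stage_2(x):
    return sum(x)


pipeline = make_pipeline([stage_0, stage_1, stage_2])

# A batch is split into micro-batches so that, in a real system,
# different stages could work on different micro-batches at once.
micro_batches = [[1, 2], [3, 4]]
outputs = [pipeline(mb) for mb in micro_batches]
print(outputs)
```

The output of each stage is the input of the next, so the stages can only be separated at points where the data passed between them is well defined.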

Predicting the future has always been the holy grail of analytics.

This article is divided into two parts; they are:

- Data Parallelism
- Distributed Data Parallelism

If you have multiple GPUs, you can combine them to operate as a single GPU with greater memory capacity.

This article is divided into two parts; they are: • Using `torch.